Quantum Error Correction: Protecting Quantum Information

Quantum error correction, a crucial field in quantum computing, tackles the fragility of quantum information. Unlike classical bits, qubits are susceptible to noise and decoherence, leading to errors that can derail computations. This necessitates sophisticated techniques to safeguard quantum information, ensuring the accuracy and reliability of quantum algorithms. Understanding these techniques is paramount for building practical, large-scale quantum computers.

The development of quantum error correction has been a fascinating journey, spurred by the inherent challenges of manipulating quantum systems. Early approaches focused on simple codes, but as quantum computing matured, more complex and robust methods emerged, including stabilizer codes, topological codes, and concatenated codes, each with its own strengths and weaknesses depending on the specific quantum computing architecture being used.

Introduction to Quantum Error Correction

Quantum computation, while promising immense computational power, faces a significant hurdle: the fragility of quantum states. Unlike classical bits which are robust, qubits are susceptible to various forms of noise and decoherence, leading to errors that corrupt computational results. Quantum error correction (QEC) is a crucial field dedicated to mitigating these errors and ensuring the reliable execution of quantum algorithms.

QEC is the cornerstone of building fault-tolerant quantum computers. It leverages the principles of quantum mechanics to detect and correct errors affecting qubits. The core idea is to encode a single logical qubit into multiple physical qubits, creating redundancy. This redundancy allows errors to be detected by checking collective properties of the physical qubits, such as parities, and applying corrective operations to restore the original logical qubit state.

Crucially, this process is done without directly measuring the encoded data qubits, which would collapse their superposition and destroy the stored information. Instead, auxiliary (ancilla) qubits are coupled to the data qubits and measured, revealing which error occurred but nothing about the logical state itself.

Historical Development of Quantum Error Correction

The theoretical foundations of QEC were laid in the mid-1990s. Peter Shor’s groundbreaking work in 1995 presented the first quantum error-correcting code, a nine-qubit code capable of correcting an arbitrary error on any single qubit. This was followed by the development of other important codes, such as the Steane code and the surface code, each offering different levels of error correction capabilities and suitability for various architectures.

The field has progressed significantly since then, moving from theoretical proposals to experimental demonstrations of error correction in various quantum computing platforms, including superconducting circuits, trapped ions, and photonic systems. Ongoing research continues to push the boundaries of QEC, aiming to develop more efficient and robust codes to address the challenges of building large-scale fault-tolerant quantum computers.

Types of Quantum Errors and Their Impact

Quantum systems are susceptible to various types of errors that can disrupt computation. These errors can broadly be categorized into bit-flip errors, phase-flip errors, and combined bit-flip and phase-flip errors.

A bit-flip error changes the state of a qubit from |0⟩ to |1⟩ or vice versa. Imagine a qubit in the state |0⟩, analogous to a classical bit being 0. A bit-flip error would change this to |1⟩, akin to the classical bit flipping to 1. On a superposition α|0⟩ + β|1⟩ the same error swaps the amplitudes, giving β|0⟩ + α|1⟩. This is similar to a classical bit-flip error, but the consequences are more profound in the quantum realm due to superposition and entanglement.

A phase-flip error affects the phase of a qubit. For instance, a qubit in a superposition state α|0⟩ + β|1⟩ might undergo a phase-flip error, changing its state to α|0⟩ − β|1⟩. This subtle change can significantly impact the outcome of quantum computations, leading to incorrect results. Phase-flip errors are unique to quantum systems and don’t have a direct classical analogue.

Combined bit-flip and phase-flip errors represent the most general type of error. These errors can simultaneously affect both the amplitude and phase of a qubit’s state, making them the most challenging to correct.

Such errors could manifest as a combination of both bit-flip and phase-flip, corrupting both the amplitude and phase information simultaneously, leading to complex error patterns that require more sophisticated QEC techniques to handle. The impact of these errors is a degradation of the fidelity of the quantum computation, potentially leading to incorrect results or a complete failure of the computation.

The severity of the impact depends on the error rate and the type of algorithm being executed. For example, a single error in a sensitive quantum algorithm could lead to significant deviations from the expected output.
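
The three error types described above can be illustrated on a single-qubit state written as a pair of amplitudes (α, β). The following is a minimal sketch in plain Python; the function names and the example state 0.6|0⟩ + 0.8|1⟩ are chosen here for illustration and do not come from any particular library.

```python
def bit_flip(state):
    """Pauli X: swap the amplitudes of |0> and |1>."""
    alpha, beta = state
    return (beta, alpha)

def phase_flip(state):
    """Pauli Z: negate the amplitude of |1>."""
    alpha, beta = state
    return (alpha, -beta)

def combined_flip(state):
    """Bit flip followed by phase flip (Pauli Y up to a global phase)."""
    return phase_flip(bit_flip(state))

def probabilities(state):
    """Probabilities of reading 0 or 1 in the computational basis."""
    return tuple(abs(amplitude) ** 2 for amplitude in state)

# Illustrative state with unequal amplitudes: 0.6|0> + 0.8|1>.
psi = (0.6, 0.8)
print(bit_flip(psi))       # amplitudes swapped
print(phase_flip(psi))     # sign of the |1> amplitude flipped
print(combined_flip(psi))  # both effects at once
# A phase flip leaves the readout probabilities unchanged, which is why
# it cannot be noticed by simply reading the qubit in this basis.
print(probabilities(psi) == probabilities(phase_flip(psi)))
```

The last line highlights why phase-flip errors have no classical counterpart: the measurement statistics in the computational basis are identical before and after the error, yet the state has changed.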

Types of Quantum Error Correction Codes

Quantum error correction is crucial for building fault-tolerant quantum computers. Several different types of quantum error correcting codes exist, each with its own strengths and weaknesses, making them suitable for different applications and hardware platforms. The choice of code depends heavily on the specific needs of the quantum computer being built, considering factors such as the type and rate of errors, the available resources, and the overall architecture.

Three families of quantum error correction codes are discussed most often: stabilizer codes, topological codes, and concatenated codes. These families are not mutually exclusive (the surface code, for example, is both a stabilizer code and a topological code), but they emphasize different structures, encoding methods, and error correction capabilities. Understanding these differences is essential for designing effective quantum computing systems.

Stabilizer Codes

Stabilizer codes form a large and versatile family of quantum error correction codes. They are defined by a set of commuting Pauli operators that stabilize the encoded quantum states. This mathematical framework allows for systematic construction and analysis of codes with varying parameters, such as the number of qubits protected and the types of errors they can correct. The surface code, a prominent example of a stabilizer code, is widely studied for its potential use in fault-tolerant quantum computation due to its comparatively high error threshold, its need for only nearest-neighbor interactions, and its ability to correct both bit-flip and phase-flip errors.

Other examples include the Steane code and the Shor code, each offering different levels of error correction capability and resource requirements. The encoding and decoding procedures for stabilizer codes are generally well-understood and can be implemented efficiently using quantum circuits.
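
To make the phrase "a set of commuting Pauli operators" concrete, here is a small sketch that writes one standard choice of generators for the seven-qubit Steane code as Pauli strings and checks that they commute. The helper names `commute` and `syndrome` are hypothetical, and the string representation is an illustrative choice rather than the interface of any library.

```python
def commute(p, q):
    """Two Pauli strings commute iff they hold different non-identity
    Paulis at an even number of positions."""
    clashes = sum(1 for a, b in zip(p, q) if a != "I" and b != "I" and a != b)
    return clashes % 2 == 0

# One standard generator set for the Steane code (rows of the Hamming
# parity-check matrix, once with X and once with Z).
STEANE_GENERATORS = [
    "IIIXXXX",
    "IXXIIXX",
    "XIXIXIX",
    "IIIZZZZ",
    "IZZIIZZ",
    "ZIZIZIZ",
]

def syndrome(error):
    """One bit per generator: 1 if the error anticommutes with it."""
    return tuple(0 if commute(g, error) else 1 for g in STEANE_GENERATORS)

# Every pair of generators commutes, as the stabilizer formalism requires.
print(all(commute(g, h) for g in STEANE_GENERATORS for h in STEANE_GENERATORS))

# A bit flip (X) on the first qubit is flagged only by Z-type generators.
print(syndrome("XIIIIII"))
```

Each single-qubit X, Y, or Z error produces a different non-zero syndrome under these generators, which is what allows the code to correct any single-qubit error.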

Topological Codes

Topological codes leverage the concept of topological order to protect quantum information. Rather than relying on redundancy among a few isolated qubits, these codes store the encoded information in global, topological properties of a many-qubit system, making it inherently robust against local errors. The most well-known example is the toric code, in which physical qubits sit on the edges of a two-dimensional lattice with periodic boundaries.

Errors manifest as excitations that propagate through the lattice. They are detected by measuring local stabilizer operators, while the logical information itself is stored in non-local degrees of freedom that no single local error can reach. The inherent fault tolerance of topological codes stems from their ability to detect and correct errors even if they affect multiple qubits simultaneously. However, their implementation often requires more qubits and more measurements than small codes such as the Steane code.

This increased complexity can present challenges in practical implementations.

Concatenated Codes

Concatenated codes are constructed by recursively applying a smaller, simpler code multiple times. This hierarchical structure suppresses the logical error rate further with each level, provided the physical error rate lies below the code’s threshold. The idea is to encode the information using an inner code, then encode the result of the inner encoding using an outer code, and so on. This approach offers a way to combine the strengths of different codes and potentially achieve better performance than using a single, more complex code.

For instance, one might use a simple code as the inner code to handle frequent, small errors, and a more powerful code as the outer code to address less frequent but more severe errors. The primary advantage is that each additional level can sharply reduce the logical error rate once the physical error rate is below threshold, but this comes at the cost of increased overhead in terms of both qubits and operations.

The complexity of encoding and decoding grows significantly with the number of concatenation levels.
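
The trade-off between error suppression and overhead can be sketched with a common textbook approximation: for a code that corrects one error per block, each level of concatenation maps an error rate p to roughly c·p², so the threshold sits at 1/c. The constant `c = 1e4` and the block size of 7 (as for the Steane code) are assumptions picked for illustration, not measured values.

```python
def concatenated_error_rate(p, c, levels):
    """Apply the approximate recursion p -> c * p**2 once per level."""
    rate = p
    for _ in range(levels):
        rate = c * rate ** 2
    return rate

def physical_qubits(block_size, levels):
    """Each level replaces every qubit with a full code block."""
    return block_size ** levels

C = 1e4          # assumed constant, giving an illustrative threshold of 1e-4
BLOCK_SIZE = 7   # assumed base code size (the Steane code uses 7 qubits)

for levels in range(4):
    below = concatenated_error_rate(1e-5, C, levels)  # below threshold
    above = concatenated_error_rate(1e-3, C, levels)  # above threshold
    print(levels, physical_qubits(BLOCK_SIZE, levels), below, above)
```

Below the assumed threshold the error rate falls doubly exponentially with the number of levels, while the qubit count grows exponentially. Above the threshold the recursion makes things worse, which is why the physical error rate matters so much.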

Performance and Complexity Comparison

Comparing the performance and complexity of these codes directly is difficult, as it depends on various factors including the specific code parameters, the noise model, and the hardware platform. However, some general observations can be made. Stabilizer codes generally offer a good balance between performance and complexity, making them a popular choice for near-term applications. Topological codes are known for their high potential error correction thresholds but can be more complex to implement.

Concatenated codes can achieve high thresholds but suffer from increased overhead. The choice of the best code depends on the specific application and constraints of the quantum computing architecture. For example, a system with high qubit connectivity might be better suited for stabilizer codes, while a system with inherent topological properties might be more suitable for topological codes.

Quantum Error Correction Techniques

Quantum error correction is crucial for building fault-tolerant quantum computers. These techniques aim to protect delicate quantum information from decoherence and noise, ensuring reliable computation. The process involves encoding quantum information redundantly, detecting errors, and correcting them without directly measuring the fragile quantum state.

Encoding quantum information using quantum error correction codes involves representing a logical qubit using multiple physical qubits. This redundancy allows the detection and correction of errors affecting some of the physical qubits without disturbing the encoded logical qubit. The specific encoding scheme depends on the chosen quantum error correction code (e.g., Shor code, Steane code, surface code). For instance, the simplest approach might involve encoding a single logical qubit into three physical qubits, where the majority vote determines the logical qubit’s state.

Encoding Quantum Information

The process of encoding a qubit using a quantum error correction code involves mapping the state of a single logical qubit onto multiple physical qubits. This mapping is carefully chosen such that certain errors can be detected and corrected. This typically involves applying specific quantum gates to the physical qubits according to the chosen code’s encoding circuit. For example, the Steane code encodes a single logical qubit into seven physical qubits, allowing for the correction of single-qubit errors.

The encoding operation creates a highly entangled state between the physical qubits, which carries the information of the logical qubit in a protected manner.
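
As a small illustration of an encoding circuit, the sketch below simulates the three-qubit bit-flip code rather than the seven-qubit Steane code, purely to keep the example short. It stores the state vector as a plain Python list and applies two CNOT gates to turn α|0⟩ + β|1⟩ into α|000⟩ + β|111⟩. The function names and the convention that qubit 0 is the leftmost bit are assumptions made for this example.

```python
def cnot(state, control, target, n):
    """Apply a CNOT gate to an n-qubit state vector.

    Basis states are indexed by their bit string, with qubit 0 as the
    leftmost (most significant) bit.
    """
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        if (index >> (n - 1 - control)) & 1:
            index ^= 1 << (n - 1 - target)  # flip the target bit
        new_state[index] += amplitude
    return new_state

def encode_bit_flip(alpha, beta):
    """Encode alpha|0> + beta|1> as alpha|000> + beta|111>."""
    n = 3
    state = [0j] * 2 ** n
    state[0b000] = alpha  # data qubit in |0>, two fresh qubits in |0>
    state[0b100] = beta   # data qubit in |1>, two fresh qubits in |0>
    state = cnot(state, 0, 1, n)
    state = cnot(state, 0, 2, n)
    return state

encoded = encode_bit_flip(0.6, 0.8)
for index, amplitude in enumerate(encoded):
    if amplitude != 0:
        print(format(index, "03b"), amplitude)
```

Only the basis states 000 and 111 carry amplitude after encoding, so the three qubits are entangled and no single qubit holds the logical information on its own. This particular code guards against bit flips only; codes such as the Steane code extend the same idea to phase flips as well.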

Syndrome Measurement and Error Correction

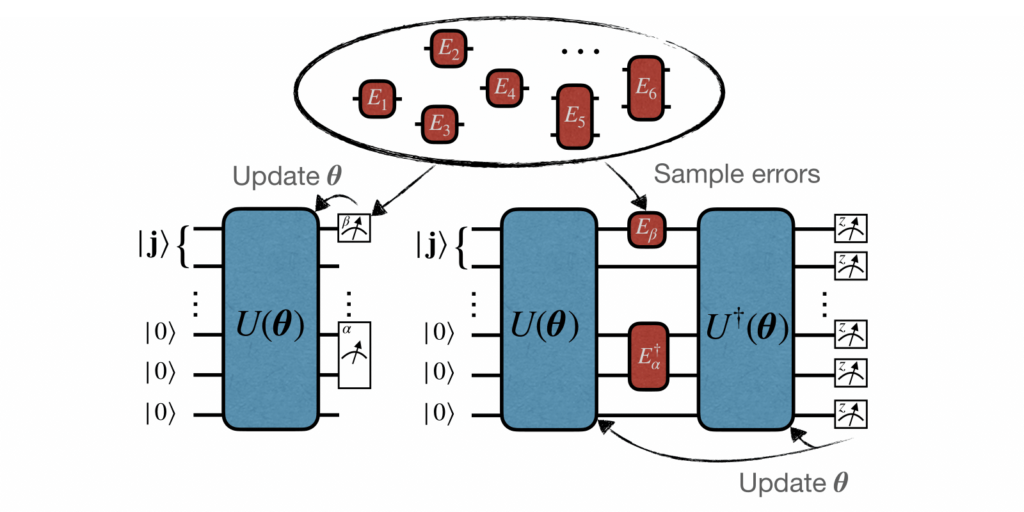

After encoding, the system is subjected to noise, which might introduce errors into the physical qubits. Syndrome measurement is a non-destructive process designed to identify the type and location of these errors without directly measuring the fragile encoded quantum information. This is typically achieved by coupling auxiliary (ancilla) qubits to groups of data qubits and measuring only the ancillas; the results of these measurements form the syndrome.

The syndrome acts as a pointer indicating the error’s location and type. Based on the syndrome, a correction operation is applied to the physical qubits to undo the effects of the noise. This correction operation is also carefully chosen to preserve the integrity of the logical qubit’s state.
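
The idea of a syndrome acting as a pointer can be sketched for the three-qubit bit-flip code. The two parity checks compare neighboring qubits; because both branches of the encoded superposition give the same parities, reading them reveals the error location but nothing about α or β. The lookup table and function names below are illustrative assumptions, and the simulation idealizes the measurement as noiseless.

```python
N = 3  # number of physical qubits; qubit 0 is the leftmost bit

def apply_x(state, qubit):
    """Flip one qubit of a 3-qubit state vector (a bit-flip error or fix)."""
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        new_state[index ^ (1 << (N - 1 - qubit))] = amplitude
    return new_state

def syndrome(state):
    """Return the parities (q0 xor q1, q1 xor q2) shared by all branches."""
    outcomes = set()
    for index, amplitude in enumerate(state):
        if abs(amplitude) > 1e-12:
            bits = [(index >> (N - 1 - q)) & 1 for q in range(N)]
            outcomes.add((bits[0] ^ bits[1], bits[1] ^ bits[2]))
    if len(outcomes) != 1:
        raise ValueError("state does not have a definite syndrome")
    return outcomes.pop()

# Syndrome -> qubit to flip back (None means no correction needed).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state):
    qubit = CORRECTION[syndrome(state)]
    return state if qubit is None else apply_x(state, qubit)

# Encoded state 0.6|000> + 0.8|111>, then a bit flip on the middle qubit.
encoded = [0j] * 8
encoded[0b000], encoded[0b111] = 0.6, 0.8
corrupted = apply_x(encoded, 1)
print(syndrome(corrupted))            # points at the middle qubit
print(correct(corrupted) == encoded)  # the logical state is restored
```

Note that `correct` never inspects the amplitudes 0.6 and 0.8 when choosing the fix; it only uses the two parity bits.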

Quantum Error Correction Protocol Workflow

The following table outlines the steps involved in a typical quantum error correction protocol.

| Step | Input | Output | Potential Errors |
|---|---|---|---|
| Encoding | Logical qubit state, physical qubits | Encoded logical qubit state across multiple physical qubits | Errors in gate implementation during encoding |
| Noise Exposure | Encoded logical qubit state | Encoded logical qubit state with potential errors | Qubit decoherence, bit-flip errors, phase-flip errors |
| Syndrome Measurement | Encoded logical qubit state | Syndrome indicating error location and type | Errors in measurement process |
| Error Correction | Syndrome, encoded logical qubit state | Corrected encoded logical qubit state | Errors in the correction operation |
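
The four steps in the table can be walked through numerically for the three-qubit bit-flip code. The sketch below assumes an idealized setting in which only the noise-exposure step introduces errors (independent bit flips with probability `p` per qubit), while encoding, syndrome measurement, and correction are perfect. It enumerates every error pattern and adds up the probability that the protocol ends with a logical bit flip.

```python
from itertools import product

# Syndrome -> correction pattern for the three-qubit bit-flip code.
CORRECTIONS = {
    (0, 0): (0, 0, 0),
    (1, 0): (1, 0, 0),
    (1, 1): (0, 1, 0),
    (0, 1): (0, 0, 1),
}

def logical_failure_probability(p):
    """Exact probability that correction leaves a logical bit flip."""
    total = 0.0
    for errors in product((0, 1), repeat=3):       # noise exposure
        probability = 1.0
        for flipped in errors:
            probability *= p if flipped else 1 - p
        syndrome = (errors[0] ^ errors[1], errors[1] ^ errors[2])  # measurement
        fix = CORRECTIONS[syndrome]                # error correction
        residual = tuple(e ^ f for e, f in zip(errors, fix))
        if residual == (1, 1, 1):                  # all three flipped: logical error
            total += probability
    return total

for p in (0.001, 0.01, 0.1):
    print(p, logical_failure_probability(p))
```

The result matches the closed form 3p² − 2p³: the protocol fails only when two or more qubits flip, so for small `p` the encoded qubit is far more reliable than a bare one. Once the other rows of the table (faulty gates, measurements, and corrections) are taken into account the analysis becomes considerably more involved.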

Fault-Tolerant Quantum Computation

Fault-tolerant quantum computation is the holy grail of quantum computing, aiming to build quantum computers that can perform computations reliably despite the inherent fragility of quantum bits (qubits). Unlike classical bits, qubits are susceptible to errors from various sources like noise in the environment or imperfections in the hardware. Fault tolerance leverages quantum error correction to mitigate these errors and achieve reliable computation.

Essentially, it’s about building a system robust enough to perform complex calculations even when individual components aren’t perfect. The relationship between fault tolerance and quantum error correction is symbiotic. Quantum error correction provides the tools to detect and correct errors in individual qubits, while fault tolerance uses these tools to design and implement quantum circuits that are resilient to errors at a larger scale.

Without robust error correction, the accumulation of errors would quickly overwhelm any quantum computation, rendering the results meaningless. Fault tolerance ensures that the probability of a computation failing due to errors remains acceptably low, even for long and complex calculations.

Requirements for Fault-Tolerant Quantum Computers

Building fault-tolerant quantum computers demands meeting several stringent requirements. These include achieving high-fidelity qubit operations, implementing efficient quantum error correction codes, and designing quantum circuits that are inherently robust to errors. The threshold theorem, a cornerstone of fault-tolerant quantum computation, states that if the error rate of individual gates and measurements is below a certain threshold, then arbitrarily long computations can be performed with arbitrarily low error probability. Estimates of the threshold vary widely with the code and noise model; a figure of around 1% is often quoted for the surface code.

This threshold depends on the specific quantum error correction code and the architecture used. Meeting this threshold requires significant advancements in hardware and software. For example, maintaining qubit coherence times long enough to perform error correction is crucial. Additionally, the overhead in terms of the number of physical qubits required to implement a single logical qubit protected by error correction is substantial, often reaching several hundreds or thousands.
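
A rough feel for this overhead can be obtained from a widely used heuristic for the surface code, in which the logical error rate scales as A·(p/p_th)^((d+1)/2) for code distance d. The sketch below uses that heuristic with assumed values A = 0.1 and p_th = 1%, and counts 2d² − 1 physical qubits per logical qubit (data plus measurement qubits in a rotated surface code patch). All of these numbers are illustrative assumptions, not properties of any specific device.

```python
def surface_code_logical_error(p, distance, p_th=0.01, prefactor=0.1):
    """Heuristic logical error rate per round for a distance-d surface code."""
    return prefactor * (p / p_th) ** ((distance + 1) / 2)

def physical_qubits(distance):
    """Data plus measurement qubits in one rotated surface code patch."""
    return 2 * distance ** 2 - 1

def smallest_distance(p, target, p_th=0.01, prefactor=0.1):
    """Smallest odd distance whose heuristic error rate meets the target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below the threshold")
    distance = 3
    while surface_code_logical_error(p, distance, p_th, prefactor) > target:
        distance += 2
    return distance

# Assumed physical error rate of 0.2% and a target logical rate of 1e-10.
d = smallest_distance(p=2e-3, target=1e-10)
print(d, physical_qubits(d))
```

Under these assumptions the required qubit count lands in the range of many hundreds to over a thousand per logical qubit, in line with the overhead described above. Moving the physical error rate closer to the threshold increases the required distance quickly.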

Examples of Fault-Tolerant Quantum Algorithms

While fully fault-tolerant quantum computers are still under development, researchers have made progress in designing and simulating fault-tolerant algorithms. One example is Shor’s algorithm for factoring large numbers, a task considered computationally intractable for classical computers. Implementing Shor’s algorithm fault-tolerantly requires protecting the qubits used in the algorithm from errors during the computation. This involves encoding the qubits using a quantum error correction code and applying fault-tolerant quantum gates that preserve the encoded information.

The complexity of this implementation is significantly higher than a non-fault-tolerant version, but it’s necessary to ensure reliable results for large numbers. Another example is quantum simulation, where quantum computers can simulate the behavior of quantum systems. Fault-tolerant techniques allow for simulating larger and more complex quantum systems than would be possible without error correction, enabling breakthroughs in fields like materials science and drug discovery.

These algorithms rely heavily on the ability to perform numerous quantum gates with high fidelity, which is only achievable with fault-tolerant techniques. Simulations of these fault-tolerant algorithms provide crucial insights into their feasibility and pave the way for their implementation on future hardware.

Challenges and Future Directions in Quantum Error Correction

Quantum error correction (QEC) is crucial for building fault-tolerant quantum computers, but significant hurdles remain before widespread implementation. The fragility of quantum states, coupled with the inherent difficulty of manipulating them, presents a complex engineering and theoretical challenge. Overcoming these challenges is vital for realizing the full potential of quantum computing. Developing and implementing efficient quantum error correction schemes faces several key obstacles.

These obstacles span theoretical limitations, technological constraints, and the sheer complexity of the systems involved. Addressing these issues requires a multi-pronged approach combining theoretical advancements, materials science breakthroughs, and innovative engineering solutions.

Major Challenges in Quantum Error Correction

The pursuit of effective QEC faces numerous challenges. One significant hurdle is the overhead required for error correction. Current schemes necessitate a substantial increase in the number of qubits needed to protect a smaller number of logical qubits, significantly impacting scalability. Furthermore, the complexity of the error correction protocols themselves adds to the computational burden. Another challenge lies in the development of robust and efficient quantum gates that are themselves resistant to errors.

Imperfect gate operations contribute to the overall error rate, undermining the effectiveness of the correction process. Finally, the decoherence of qubits, their susceptibility to noise from the environment, remains a persistent and significant challenge. Different types of noise, such as bit-flip errors and phase errors, demand different mitigation strategies, adding to the complexity. Maintaining coherence while performing operations is crucial for preserving quantum information.

Potential of Novel Quantum Error Correction Techniques

While existing QEC techniques have made significant progress, research into novel approaches holds the key to unlocking more efficient and robust error correction. Topological quantum computation, for instance, offers a promising avenue by leveraging the properties of topological states of matter to protect quantum information against errors. These systems inherently possess a higher degree of resilience to noise compared to traditional approaches.

Another area of active research is the development of quantum error correction codes that are optimized for specific noise models. Tailoring the code to the dominant noise sources in a given system can significantly improve performance. This approach moves away from the assumption of a general noise model towards more realistic and effective solutions. Finally, hybrid approaches combining different QEC techniques are being explored to leverage the strengths of each method and mitigate their weaknesses.

Potential Research Areas for Improving Quantum Error Correction

The following research areas are crucial for advancing the field of quantum error correction:

- Developing new quantum error-correcting codes with lower overhead and improved performance.

- Improving the efficiency and robustness of quantum gates used in error correction circuits.

- Exploring novel physical implementations of qubits that are less susceptible to decoherence.

- Developing advanced techniques for characterizing and mitigating different types of quantum noise.

- Investigating the potential of topological quantum computation and other fault-tolerant architectures.

- Developing hybrid quantum error correction schemes that combine the strengths of different approaches.

- Creating efficient algorithms for decoding quantum error-correcting codes.

- Developing new theoretical frameworks for understanding and modeling quantum noise.

Quantum Error Correction in Different Architectures

Implementing quantum error correction (QEC) is a significant hurdle in building large-scale, fault-tolerant quantum computers. The choice of physical architecture significantly impacts the feasibility and efficiency of QEC. Different architectures present unique challenges and advantages regarding qubit coherence times, control fidelity, and the complexity of implementing error correction protocols. This section compares and contrasts QEC implementation across three leading architectures: superconducting, trapped ion, and photonic quantum computers.

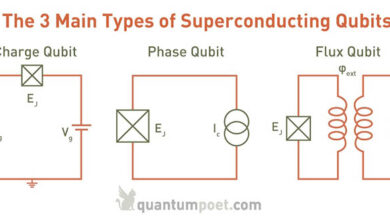

Superconducting Qubits and Quantum Error Correction

Superconducting qubits are currently among the most mature technologies for building quantum computers, with several companies developing large-scale systems. These qubits leverage the quantum properties of superconducting circuits, typically relying on Josephson junctions to create and manipulate qubits. The primary challenge in implementing QEC with superconducting qubits lies in their relatively short coherence times and susceptibility to noise from various sources, including electromagnetic interference and material imperfections.

However, the scalability and relatively high control fidelity of superconducting qubits make them a promising platform for implementing surface codes, a leading QEC scheme. Surface codes require a large number of physical qubits to encode a single logical qubit, making the scalability of the superconducting architecture crucial. Recent advancements in fabrication techniques and qubit design are steadily improving coherence times and control fidelity, paving the way for more robust QEC.

Trapped Ion Qubits and Quantum Error Correction

Trapped ion qubits utilize individual ions trapped in electromagnetic fields. The ions’ internal energy levels serve as qubits, and laser pulses manipulate their quantum states. Trapped ion systems generally exhibit longer coherence times compared to superconducting qubits, offering an advantage for QEC. Furthermore, the high control fidelity achieved in trapped ion systems allows for precise implementation of quantum gates and error correction protocols.

However, scalability remains a major challenge for trapped ion systems. Building large-scale arrays of individually trapped ions requires sophisticated control electronics and vacuum systems. While various QEC techniques, including stabilizer codes, can be implemented, the scalability limitations currently restrict the size of the encoded logical qubits.

Photonic Qubits and Quantum Error Correction

Photonic qubits use photons, particles of light, as carriers of quantum information. Their inherent stability and resistance to certain types of noise make them attractive for QEC. Photonic qubits can propagate over long distances with minimal decoherence, making them suitable for distributed quantum computing architectures. However, implementing QEC with photonic qubits presents unique challenges. Creating high-fidelity quantum gates between photons requires complex optical components and precise control.

Furthermore, the probabilistic nature of photon-photon interactions necessitates the use of specific QEC codes, such as those based on linear optics. Despite these challenges, the potential for fault-tolerant quantum communication and long-distance entanglement generation makes photonic QEC a significant area of research.

Comparative Table of Quantum Error Correction Methods Across Architectures

The following table summarizes the performance of different quantum error correction methods across various architectures. Note that the data represents current progress and is subject to rapid advancement. Values may vary significantly depending on specific implementations and experimental conditions.

| Architecture | Error Correction Method | Logical Qubit Fidelity | Challenges |
|---|---|---|---|
| Superconducting | Surface Code | ~99.9% (projected, varies widely) | Short coherence times, crosstalk |
| Trapped Ion | Stabilizer Codes | ~99.5% (experimental) | Scalability, individual qubit control complexity |
| Photonic | Linear Optics Codes | ~98% (experimental) | Low gate fidelity, probabilistic interactions |

Illustrative Example: Surface Code

The surface code is a prominent example of a topological quantum error correction code, renowned for its potential scalability and robustness against noise. Its two-dimensional lattice structure and clever encoding strategy allow for efficient detection and correction of both bit-flip and phase-flip errors. Understanding its operation involves visualizing its geometrical representation, the process of syndrome extraction, and the subsequent decoding to recover the encoded quantum information. The surface code encodes a logical qubit using a large number of physical qubits arranged on a two-dimensional grid.

Each physical qubit is represented by a node on this grid, and the logical qubit’s state is encoded in a non-local manner across many of these physical qubits. This redundancy provides the code’s resilience to errors. The specific encoding scheme depends on the chosen boundary conditions of the grid and the size of the code, but the fundamental principle remains the same: the information is distributed to make it resistant to localized errors.

Surface Code Geometry and Error Correction

The surface code’s geometry is crucial to its error-correcting capabilities. Imagine a square lattice of qubits. Each stabilizer measurement acts on a small group of neighboring qubits (four in the interior of the lattice, fewer at the boundary). These measurements, which are non-destructive, check for errors without disturbing the encoded information. Stabilizer generators are arranged in a pattern of ‘X’ and ‘Z’ type measurements forming plaquettes (small squares) across the lattice.

A Z-type measurement detects bit-flip errors on the qubits around the plaquette, while an X-type measurement detects phase-flip errors, because an error only shows up in the checks it anticommutes with. The arrangement ensures that a single bit-flip or phase-flip error affects only a limited number of stabilizer measurements. The larger the lattice, the more qubits are involved in encoding a single logical qubit, thus improving the code’s resilience to errors.

Syndrome Extraction and Error Correction

Syndrome extraction is the process of performing the stabilizer measurements on the lattice. Let’s visualize a simplified 5×5 grid. Imagine each qubit represented by a small circle. Each circle is connected to its neighbors by lines representing the stabilizer measurements. X-type measurements are represented by red squares surrounding four qubits, while Z-type measurements are represented by blue squares surrounding four other qubits, with the pattern alternating.

The outcome of each stabilizer measurement (0 or 1) constitutes the syndrome. A syndrome of all zeros indicates that no error was detected; a non-zero syndrome indicates the presence of one or more errors.

The syndrome provides information about the location and type of errors. For example, if a single bit-flip error occurs on a qubit in the interior of the lattice, it will change the outcome of the two adjacent Z-type stabilizer measurements (the two blue squares that share that qubit), while the neighboring X-type (red) measurements are unaffected.

This pattern of changes in the syndrome allows us to pinpoint the location and type of the error. A sophisticated decoding algorithm then uses this syndrome information to determine the most likely error configuration and correct it. This algorithm often involves finding the minimum-weight matching in a graph representing the syndrome. The decoding algorithm is designed to minimize the probability of miscorrection, which is crucial for maintaining the integrity of the encoded information.
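
The link between errors, syndromes, and matching can be sketched with a toy model. To avoid boundary cases, the sketch uses a toric layout (periodic boundaries, qubits on the edges of the grid) rather than the planar grid described above, tracks only bit-flip errors and Z-type checks, and pairs up flagged checks by brute force instead of using an efficient minimum-weight matching algorithm. All names and the edge labelling are assumptions made for this illustration.

```python
def z_syndrome(x_errors, size):
    """Return the set of Z-type checks flagged by a set of bit-flip errors.

    Each error is an edge ("h" or "v", row, col). A horizontal edge borders
    the plaquettes below and above it; a vertical edge borders the
    plaquettes to its right and left.
    """
    flagged = set()
    for kind, row, col in x_errors:
        if kind == "h":
            touched = [(row, col), ((row - 1) % size, col)]
        else:
            touched = [(row, col), (row, (col - 1) % size)]
        for plaquette in touched:
            flagged ^= {plaquette}  # two flips on one check cancel out
    return flagged

def torus_distance(a, b, size):
    """Shortest number of steps between two plaquettes on the torus."""
    d_row = abs(a[0] - b[0])
    d_col = abs(a[1] - b[1])
    return min(d_row, size - d_row) + min(d_col, size - d_col)

def min_weight_pairing(flagged, size):
    """Brute-force minimum-weight pairing; only suitable for a few checks.

    Assumes an even number of flagged checks, which always holds on a torus.
    """
    flagged = sorted(flagged)
    if not flagged:
        return 0, []
    first, rest = flagged[0], flagged[1:]
    best = None
    for i, partner in enumerate(rest):
        cost, pairs = min_weight_pairing(rest[:i] + rest[i + 1:], size)
        cost += torus_distance(first, partner, size)
        if best is None or cost < best[0]:
            best = (cost, [(first, partner)] + pairs)
    return best

SIZE = 5
single = z_syndrome({("h", 2, 3)}, SIZE)
chain = z_syndrome({("h", 2, 3), ("h", 3, 3)}, SIZE)
print(sorted(single), min_weight_pairing(single, SIZE))
print(sorted(chain), min_weight_pairing(chain, SIZE))
```

A single bit flip flags the two checks that share the affected qubit, while a chain of two neighboring flips flags only the checks at its ends. A full decoder would go one step further and apply corrections along a shortest path between each matched pair, which is not implemented here.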

Conclusion

In conclusion, the quest for robust quantum error correction is a pivotal challenge in realizing the full potential of quantum computing. While significant progress has been made, developing efficient and scalable error correction schemes remains a key area of research. Overcoming these hurdles will pave the way for fault-tolerant quantum computers capable of tackling problems currently intractable for even the most powerful classical computers.

The journey towards reliable quantum computation is far from over, with continuing innovations shaping the future of this transformative technology.

FAQ

What are the main sources of quantum errors?

Quantum errors stem from interactions with the environment (decoherence) and imperfections in the quantum hardware itself (e.g., gate errors). These lead to unwanted changes in the qubit states.

How does quantum error correction differ from classical error correction?

Classical error correction deals with bits that are either 0 or 1. Quantum error correction must account for the superposition and entanglement of qubits, making it significantly more complex.

What is the significance of fault-tolerant quantum computation?

Fault-tolerant quantum computation uses error correction to build quantum computers that can perform computations reliably even in the presence of errors. It’s essential for achieving large-scale quantum computation.

Are there any limitations to current quantum error correction methods?

Yes, current methods are resource-intensive, requiring many physical qubits to protect a small number of logical qubits. Improving the efficiency of error correction is a major challenge.

What are some promising future directions in quantum error correction research?

Active research areas include developing new quantum codes, improving error correction techniques for specific architectures, and exploring topological protection methods.