Quantum AI hardware development and its scalability challenges

Quantum AI hardware development and its challenges in scalability are at the forefront of a technological revolution. Building quantum computers powerful enough for practical AI applications presents immense hurdles. From the delicate dance of controlling individual qubits to the daunting task of manufacturing and connecting thousands, the path is fraught with complexity. This exploration delves into the various architectural approaches, the inherent limitations of current technology, and the innovative solutions being developed to overcome these obstacles.

We’ll examine the critical role of error correction, the limitations of qubit connectivity, and the vital need for advanced control systems and cryogenic environments. Ultimately, we’ll explore the future of this rapidly evolving field and the potential for breakthroughs that could reshape the landscape of artificial intelligence.

This journey will cover the fundamental principles of quantum computing hardware relevant to AI, examining different architectures such as superconducting, trapped-ion, and photonic systems. We will dissect the scalability challenges, focusing on qubit coherence, error rates, and the complexities of manufacturing and controlling large-scale quantum systems. Further, we’ll explore quantum algorithms tailored for AI, their scalability limitations, and strategies for mitigating these challenges.

Finally, we will discuss the crucial aspects of control systems, cryogenics, software development, and the promising research avenues that could pave the way for a future where powerful quantum AI becomes a reality.

Introduction to Quantum AI Hardware

Quantum computing harnesses the principles of quantum mechanics to perform certain calculations that are beyond the practical reach of classical computers. This opens exciting possibilities for artificial intelligence, potentially enabling AI algorithms that tackle problems currently considered intractable in areas such as drug discovery, materials science, and financial modeling. The fundamental difference lies in the way information is processed: classical computers use bits representing 0 or 1, while quantum computers use qubits, which can represent 0, 1, or a superposition of both simultaneously.

This superposition, along with entanglement (correlations between qubits that cannot be described one qubit at a time), lets quantum algorithms work with many computational paths at once, which can yield large, in some cases exponential, speedups for certain types of computations.

Quantum AI hardware aims to physically realize these quantum phenomena, allowing us to build and program quantum computers capable of running sophisticated AI algorithms. Several architectural approaches are being pursued, each with its own unique strengths and weaknesses.
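To make superposition and entanglement concrete, here is a minimal sketch that simulates two qubits with plain NumPy matrices. It is an illustration on a classical machine, not code for any particular quantum device; the function names are made up for this example.

```python
import numpy as np

# Single-qubit basis state |0> and a few gates written as plain matrices.
ZERO = np.array([1.0, 0.0])
H = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)  # Hadamard gate
I2 = np.eye(2)
# CNOT with the first qubit as control and the second as target.
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=float)


def bell_state() -> np.ndarray:
    """Return (|00> + |11>)/sqrt(2) as a length-4 state vector."""
    state = np.kron(ZERO, ZERO)       # start in |00>
    state = np.kron(H, I2) @ state    # put the first qubit in superposition
    return CNOT @ state               # entangle the two qubits


def measurement_probabilities(state: np.ndarray) -> np.ndarray:
    """Born rule: probabilities of the outcomes 00, 01, 10, 11."""
    return np.abs(state) ** 2


probs = measurement_probabilities(bell_state())
print(dict(zip(["00", "01", "10", "11"], probs.round(3))))
```

Only the outcomes 00 and 11 have non-zero probability, so measuring one qubit fixes the result of the other. Note that the state vector has 2^n entries for n qubits, which is why classical simulation of this kind stops being practical beyond a few dozen qubits.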

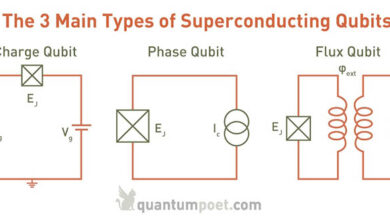

Superconducting Quantum Computing Architectures

Superconducting qubits leverage the quantum properties of superconducting circuits cooled to extremely low temperatures near absolute zero. These circuits are designed to exhibit quantized energy levels, the lowest two of which represent the 0 and 1 states of a qubit. Control and measurement are achieved through microwave pulses. Companies like Google, IBM, and Rigetti are at the forefront of this technology. Significant strengths of superconducting qubits are their fast gate operations (typically tens to hundreds of nanoseconds) and the possibility of scaling to large numbers of qubits through fabrication techniques similar to those used in classical microchip manufacturing. Their coherence times (the time a qubit maintains its quantum state), however, are relatively short compared with those of trapped ions.

However, the need for extremely low temperatures and the complexity of the control electronics present significant challenges to scalability and cost-effectiveness. For example, Google’s Sycamore processor, while demonstrating quantum supremacy in a specific task, requires extensive cryogenic infrastructure.

Trapped Ion Quantum Computing Architectures

In trapped ion systems, individual ions are trapped using electromagnetic fields and their internal energy levels are used to encode qubits. Quantum gates are implemented by precisely controlling laser pulses that interact with the ions. Companies like IonQ and Quantinuum (formed from Honeywell's quantum computing business) are developing this technology. Trapped ion systems boast exceptionally long coherence times and high-fidelity gate operations, making them highly accurate.

However, scaling up the number of qubits presents a significant challenge due to the individual addressing and control required for each ion. The laser-based control systems become harder to build and calibrate as ion chains grow, and gate operations are considerably slower than in superconducting systems, both of which limit the potential for large-scale integration.

Photonic Quantum Computing Architectures

Photonic quantum computers utilize photons (particles of light) as qubits. Quantum operations are performed using optical components such as beam splitters, phase shifters, and detectors. This approach offers the potential for scalability due to the ease of routing and manipulating photons using optical fibers. Furthermore, photons are naturally less susceptible to noise compared to other qubit types.

However, generating and manipulating entangled photons with high fidelity remains a significant hurdle. While several research groups are actively developing photonic quantum computing, it’s still in its relatively early stages compared to superconducting and trapped ion approaches. The challenge lies in developing efficient and scalable sources of entangled photons and highly accurate optical components for implementing quantum gates.

Scalability Challenges in Quantum AI Hardware

Building truly powerful quantum computers capable of tackling complex AI problems requires scaling up the number of qubits significantly. This presents a formidable challenge, demanding breakthroughs across multiple fronts, from qubit fabrication to error correction strategies. The current limitations significantly restrict the potential of quantum AI, hindering its ability to surpass classical approaches in many practical applications.

Qubit Coherence Times and Error Rates

Maintaining the delicate quantum states of qubits—their coherence—is crucial for performing computations. Unfortunately, qubits are highly susceptible to noise from their environment, leading to decoherence and errors. These errors accumulate during computations, compromising the accuracy of results. Longer coherence times are needed to execute longer and more complex algorithms, essential for advanced AI tasks. For example, a qubit with a coherence time of only 10 microseconds would severely limit the complexity of algorithms it could reliably run, making it unsuitable for many AI applications that require significantly longer computation times.

Current research focuses on improving qubit design and environmental shielding to extend coherence times and reduce error rates.
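The link between coherence time and algorithm length can be put into rough numbers. The sketch below assumes a simple exponential decay of coherence and a hypothetical 50 ns gate duration; both are illustrative assumptions, not figures from a specific device.

```python
import math


def max_sequential_gates(coherence_time_ns: int, gate_time_ns: int) -> int:
    """Upper bound on back-to-back gates that fit inside one coherence time."""
    return coherence_time_ns // gate_time_ns


def coherence_remaining(elapsed_ns: float, coherence_time_ns: float) -> float:
    """Fraction of coherence left after elapsed_ns, assuming exponential decay."""
    return math.exp(-elapsed_ns / coherence_time_ns)


COHERENCE_NS = 10_000  # the 10-microsecond example from the text
GATE_NS = 50           # hypothetical gate duration (assumption)

budget = max_sequential_gates(COHERENCE_NS, GATE_NS)
print("gate budget:", budget)
for depth in (10, 50, 200):
    left = coherence_remaining(depth * GATE_NS, COHERENCE_NS)
    print(f"depth {depth:>3}: coherence remaining {left:.3f}")
```

Under these assumptions the budget is 200 sequential gates, and by that depth only about 37% (1/e) of the coherence remains, so useful circuits have to be considerably shorter than the raw budget suggests.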

Manufacturing and Controlling Large Numbers of Qubits

The fabrication of large-scale quantum processors presents significant engineering hurdles. Precisely controlling individual qubits within a large array is extremely challenging, requiring advanced fabrication techniques and sophisticated control electronics. The sheer number of components needed for even moderately sized quantum computers presents a significant logistical and technological challenge. Consider the complexity of controlling thousands or millions of qubits, each requiring precise individual addressing and manipulation.

This complexity extends beyond just the hardware; sophisticated software and control systems are also necessary to manage and coordinate the interactions of these numerous qubits. This presents a formidable scaling challenge for quantum computer manufacturing.

Error Correction in Quantum Computing

Error correction is paramount for building fault-tolerant quantum computers. Unlike classical computing, where errors are relatively easy to detect and correct, quantum error correction is far more complex: quantum states cannot simply be copied, and measuring a qubit directly destroys the superposition it holds. Several approaches exist, each with its own trade-offs. The surface code, for example, uses a large number of physical qubits to encode a single logical qubit, significantly increasing the hardware overhead.

Other methods, like topological quantum computation, promise more robust error correction but face significant technological hurdles in their implementation. The overhead associated with error correction dramatically impacts the scalability of quantum computers, requiring a massive increase in the number of physical qubits to achieve even modest levels of fault tolerance. For instance, achieving a single logical qubit with high fidelity might require hundreds or even thousands of physical qubits, drastically increasing the complexity and cost of building a practical quantum computer.
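The overhead can be estimated with a back-of-the-envelope model. The sketch below assumes a rotated surface code, which uses 2d² − 1 physical qubits per logical qubit at code distance d, and a commonly quoted heuristic for the logical error rate, p_L ≈ A·(p/p_th)^((d+1)/2). The prefactor A = 0.1 and threshold p_th = 1% are illustrative assumptions, not measured values.

```python
def physical_qubits_per_logical(distance: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance ** 2 - 1


def logical_error_rate(p_phys: float, distance: int,
                       p_threshold: float = 1e-2,
                       prefactor: float = 0.1) -> float:
    """Heuristic scaling law; prefactor and threshold are assumptions."""
    return prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)


def min_distance_for_target(p_phys: float, target: float,
                            p_threshold: float = 1e-2) -> int:
    """Smallest odd code distance whose estimated logical error meets target."""
    if p_phys >= p_threshold:
        raise ValueError("physical error rate must be below the threshold")
    distance = 3
    while logical_error_rate(p_phys, distance, p_threshold) > target:
        distance += 2  # surface code distances are usually odd
    return distance


d = min_distance_for_target(p_phys=1e-3, target=5e-10)
print("distance:", d, "physical qubits per logical:",
      physical_qubits_per_logical(d))
```

With a physical error rate of 0.1% and a target logical error rate of 5e-10, this model gives distance 17 and 577 physical qubits per logical qubit, in line with the "hundreds or even thousands" estimate above. Different assumptions shift the numbers substantially.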

Qubit Connectivity and Architecture

Qubit connectivity significantly impacts the performance and scalability of quantum algorithms. The way qubits are interconnected directly influences the efficiency of quantum computations, particularly for complex algorithms used in quantum machine learning. Poor connectivity can lead to longer computation times and increased error rates, hindering the scalability of the quantum system.

The physical arrangement of qubits and their interconnections define the architecture of a quantum computer. Different architectures offer varying levels of connectivity and scalability, each with its own strengths and weaknesses. Choosing the right architecture is crucial for achieving optimal performance for specific quantum algorithms.

Impact of Qubit Connectivity on Algorithm Performance

The connectivity of qubits determines how easily information can be exchanged between them. Algorithms requiring frequent interactions between distant qubits will perform poorly on architectures with limited connectivity. For instance, algorithms based on quantum annealing or variational quantum eigensolver (VQE) methods often require high connectivity to efficiently explore the energy landscape or prepare entangled states. Limited connectivity necessitates longer sequences of operations to move information between qubits, increasing the overall computation time and the probability of errors.

Conversely, highly connected architectures enable faster and more efficient execution of these algorithms, leading to better scalability. This translates to the ability to handle larger problem sizes and more complex computations.
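The cost of limited connectivity can be sketched by counting SWAP operations. The example below models a device as a graph and uses breadth-first search to find how far apart two qubits are; it assumes that bringing two qubits next to each other takes at least (distance − 1) SWAPs. Real compilers use more elaborate routing, so treat this as a lower-bound illustration with hypothetical helper names.

```python
from collections import deque


def line_graph(n: int) -> dict:
    """Linear array: each qubit couples only to its left/right neighbor."""
    return {i: [j for j in (i - 1, i + 1) if 0 <= j < n] for i in range(n)}


def grid_graph(rows: int, cols: int) -> dict:
    """2D grid: each qubit couples to up to four nearest neighbors."""
    adjacency = {}
    for r in range(rows):
        for c in range(cols):
            neighbors = []
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    neighbors.append(rr * cols + cc)
            adjacency[r * cols + c] = neighbors
    return adjacency


def hop_distance(adjacency: dict, source: int, target: int) -> int:
    """Shortest path length between two qubits (breadth-first search)."""
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == target:
            return dist
        for neighbor in adjacency[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    raise ValueError("qubits are not connected")


def min_swaps(adjacency: dict, a: int, b: int) -> int:
    """Lower bound on SWAPs needed before a two-qubit gate on a and b."""
    return max(hop_distance(adjacency, a, b) - 1, 0)


line_swaps = min_swaps(line_graph(16), 0, 15)
grid_swaps = min_swaps(grid_graph(4, 4), 0, 15)
print("16-qubit line:", line_swaps, "SWAPs; 4x4 grid:", grid_swaps, "SWAPs")
```

For the two most distant qubits among 16, the line needs at least 14 SWAPs and the 4x4 grid at least 5. Each SWAP adds gates, time, and error, which is the overhead described above.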

Optimal Qubit Arrangement for Quantum Machine Learning

Designing an optimal qubit arrangement depends heavily on the specific quantum machine learning algorithm. For algorithms like quantum support vector machines (QSVM) or quantum neural networks (QNN), a highly connected architecture, such as a fully connected graph or a lattice with high coordination number, might be beneficial. This allows for efficient parallel processing of information and faster training.

However, fully connected architectures become increasingly challenging to implement as the number of qubits grows, due to the quadratic increase in the number of connections required. A more practical approach might involve a hybrid architecture, combining highly connected regions with less densely connected areas, to balance performance and scalability. For example, a hierarchical architecture could have tightly connected clusters of qubits, with less dense connections between clusters.

This allows for local computation within clusters and efficient communication between them.
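The quadratic growth in connections is easy to quantify. The following sketch counts couplers for three layouts of 100 qubits; the modular layout (ten fully connected modules of ten qubits joined in a ring by one link each) is a hypothetical example chosen for illustration.

```python
def all_to_all_edges(n: int) -> int:
    """Every pair of qubits is coupled: n choose 2 connections."""
    return n * (n - 1) // 2


def grid_edges(rows: int, cols: int) -> int:
    """Nearest-neighbor 2D grid: horizontal plus vertical couplers."""
    return rows * (cols - 1) + cols * (rows - 1)


def modular_edges(modules: int, module_size: int, inter_module_links: int) -> int:
    """Fully connected modules plus a given number of links between modules."""
    return modules * all_to_all_edges(module_size) + inter_module_links


full = all_to_all_edges(100)
grid = grid_edges(10, 10)
modular = modular_edges(modules=10, module_size=10, inter_module_links=10)
print(f"all-to-all: {full}, 2D grid: {grid}, modular: {modular}")
```

For 100 qubits this gives 4950 couplers for all-to-all, 180 for a 10x10 grid, and 460 for the modular layout, which shows how a hierarchical design keeps dense local connectivity at a fraction of the wiring cost.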

Comparison of Qubit Interconnection Schemes

Different interconnection schemes lead to different trade-offs between connectivity and scalability. Here’s a comparison of some common architectures:

| Architecture | Qubit Count (Example) | Connectivity | Error Rate (Example) |
|---|---|---|---|
| Linear Array | 100 | Nearest-neighbor only | 0.1% |
| 2D Grid | 100 | Nearest-neighbor in 2D | 0.05% |
| Fully Connected | 50 | All-to-all | 0.2% |
| All-to-All | 10 | Every qubit connected to every other | 0.15% |
| Hybrid Architecture (e.g., modular) | 200 | High connectivity within modules, sparse between | 0.08% |

Note: The qubit counts and error rates are illustrative examples and vary significantly depending on the specific technology used. The "Fully Connected" and "All-to-All" rows describe the same connectivity at two illustrative sizes. Error rates often depend on the qubit count and connectivity as well.

Quantum Algorithms for AI and Scalability

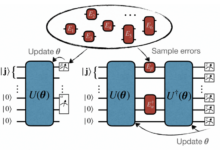


Quantum computing offers the potential to revolutionize machine learning by tackling problems currently intractable for classical computers. However, realizing this potential requires careful consideration of how quantum algorithms scale with increasing data size and model complexity. The inherent limitations of current quantum hardware necessitate the development of algorithms specifically designed to leverage the strengths of quantum systems while mitigating their weaknesses.

Quantum algorithms suitable for machine learning tasks leverage the principles of superposition and entanglement to perform computations in fundamentally different ways than classical algorithms. This allows for potential speedups in certain types of problems. However, the scalability of these algorithms remains a significant challenge.

Quantum Machine Learning Algorithms

Several quantum algorithms show promise for machine learning applications. These algorithms aim to achieve speedups over classical approaches by exploiting quantum phenomena to efficiently solve specific problems. Examples include Quantum Support Vector Machines (QSVM), Quantum Principal Component Analysis (QPCA), and variational algorithms, which use the same parameterized-circuit optimization loop as the Variational Quantum Eigensolver (VQE) to train quantum neural networks. These algorithms offer potential advantages in terms of speed and accuracy for specific tasks, but their scalability is often limited by the number of qubits available and the complexity of the quantum circuits required.

Scalability Challenges and Mitigation Strategies

The scalability of quantum machine learning algorithms is hampered by several factors. The number of qubits available in current quantum computers is relatively small, limiting the size of the datasets and models that can be processed. Furthermore, qubit coherence times are short, meaning that quantum computations are susceptible to errors. Circuit width and depth also grow with the size of the problem, in some cases steeply, making it challenging to implement and execute large-scale quantum machine learning tasks.

One key strategy to mitigate scalability challenges is algorithm design. Developing algorithms with lower qubit requirements and reduced circuit depth is crucial. Techniques such as efficient quantum data encoding, hybrid quantum-classical approaches, and error mitigation are actively being researched to improve the scalability of quantum machine learning. For instance, researchers are exploring ways to encode classical data into a smaller number of qubits, thereby reducing the hardware requirements.

Hybrid approaches combine classical and quantum computations, leveraging the strengths of both to handle larger datasets and more complex models. Error mitigation techniques aim to reduce the impact of noise and errors on the computation results.
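One widely discussed error mitigation technique is zero-noise extrapolation: the same circuit is run at deliberately amplified noise levels, and the results are extrapolated back to the zero-noise limit. The sketch below uses a toy noise model (an exponential decay with a made-up rate) in place of real hardware measurements; the model and its parameters are assumptions for illustration only.

```python
import numpy as np


def zero_noise_extrapolate(scale_factors, expectation_values, degree=1):
    """Fit a polynomial in the noise scale factor and evaluate it at zero."""
    coeffs = np.polyfit(scale_factors, expectation_values, degree)
    return float(np.polyval(coeffs, 0.0))


def toy_noisy_expectation(scale: float, ideal: float = 1.0,
                          decay: float = 0.05) -> float:
    """Stand-in for a hardware run: the signal decays as noise is amplified."""
    return ideal * np.exp(-decay * scale)


scales = [1.0, 2.0, 3.0]  # 1.0 corresponds to the device's native noise level
measured = [toy_noisy_expectation(s) for s in scales]

raw = measured[0]
mitigated = zero_noise_extrapolate(scales, measured, degree=2)
print(f"raw: {raw:.4f}, mitigated: {mitigated:.4f}, ideal: 1.0000")
```

In this toy setting the extrapolated value lands much closer to the ideal result than the raw measurement. On real devices the gain depends on how well the chosen fit matches the actual noise behavior, and the extra runs increase the total measurement cost.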

Examples of Scalability Mitigation

Consider the problem of training a quantum neural network with a variational, VQE-style optimization loop. A naive implementation might require qubit counts and circuit depths that grow quickly with the size of the network. However, by carefully designing the network architecture and employing techniques such as circuit structures inspired by tensor networks, the qubit requirements can be significantly reduced. Similarly, hybrid quantum-classical approaches can be used to train larger networks by performing part of the training on a classical computer and only using the quantum computer for specific computationally intensive tasks.

This hybrid approach allows for handling larger datasets and more complex models than would be possible with a purely quantum approach. The choice of encoding scheme for the data is also crucial; more efficient encodings lead to smaller quantum circuits and improved scalability.
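The division of labor in a hybrid quantum-classical loop can be sketched in a few lines. In the example below the "quantum" step is simulated with NumPy: a single qubit is rotated by an angle theta and the expectation value of Z is measured. A classical gradient-descent loop then updates theta using the parameter-shift rule. The one-qubit circuit, learning rate, and step count are illustrative assumptions.

```python
import numpy as np

Z = np.array([[1.0, 0.0], [0.0, -1.0]])  # observable measured on the qubit


def ry_state(theta: float) -> np.ndarray:
    """State produced by applying an RY(theta) rotation to |0>."""
    return np.array([np.cos(theta / 2), np.sin(theta / 2)])


def expectation_z(theta: float) -> float:
    """'Quantum' step (simulated here): prepare the state and measure <Z>."""
    psi = ry_state(theta)
    return float(psi @ Z @ psi)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient from two extra circuit evaluations at theta +/- pi/2."""
    plus = expectation_z(theta + np.pi / 2)
    minus = expectation_z(theta - np.pi / 2)
    return 0.5 * (plus - minus)


def minimise(theta: float = 0.5, learning_rate: float = 0.4, steps: int = 100):
    """Classical step: plain gradient descent on the measured cost."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, expectation_z(theta)


theta_opt, energy = minimise()
print(f"theta = {theta_opt:.4f}, <Z> = {energy:.6f}")
```

The optimizer drives theta toward pi, where the measured value reaches its minimum of −1. The quantum device is only asked for expectation values, while all bookkeeping and parameter updates stay on the classical side, which is what keeps circuit depth per run short.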

Control Systems and Cryogenics

Building and maintaining a quantum computer requires incredibly precise control over individual qubits, a challenge that grows rapidly with the number of qubits. Simultaneously, these delicate quantum states need to be shielded from environmental noise, necessitating extremely low temperatures. The interplay between sophisticated control systems and cryogenic environments is crucial for the development of scalable quantum AI hardware.

The challenge of controlling and stabilizing a large number of qubits stems from the need for highly accurate and synchronized microwave pulses to manipulate their quantum states. In many current designs each qubit requires its own control line, leading to a complex wiring scheme and the need for high-fidelity signal generation and distribution. Crosstalk, where signals intended for one qubit unintentionally affect others, becomes a significant issue as the number of qubits increases, leading to errors in computation. Furthermore, the control electronics themselves generate heat, which can disrupt the delicate quantum states, creating another layer of complexity in the design and operation of a quantum computer.

Qubit Control Techniques

Precise control over individual qubits is achieved through various techniques, often involving microwave pulses generated by sophisticated electronics. These pulses need to be carefully calibrated to ensure the desired quantum operations are performed with high fidelity. Advanced control algorithms, such as optimal control and feedback control, are used to mitigate errors and improve the accuracy of qubit manipulation.

For instance, dynamic decoupling techniques apply carefully timed pulses to protect qubits from environmental noise, extending their coherence times. Advanced signal processing techniques are employed to minimize crosstalk and improve the overall fidelity of qubit control.
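The simplest decoupling sequence is a single echo pulse halfway through the evolution, which reverses the phase a qubit accumulates from slowly varying noise. The sketch below is a toy Monte Carlo model: each shot has a random frequency offset that is almost constant across the two halves of the evolution. The noise strengths and shot count are arbitrary illustrative values.

```python
import numpy as np


def simulate_coherence(total_time: float, sigma_static: float,
                       sigma_drift: float, use_echo: bool,
                       shots: int = 20_000, seed: int = 0) -> float:
    """Average phase coherence |<exp(i*phase)>| over many noisy shots."""
    rng = np.random.default_rng(seed)
    # Frequency offset during the first half of the evolution.
    first = rng.normal(0.0, sigma_static, shots)
    # Offset during the second half: nearly the same, with a small drift.
    second = first + rng.normal(0.0, sigma_drift, shots)
    half = total_time / 2
    # An echo pulse at the midpoint flips the sign of later phase accumulation.
    sign = -1.0 if use_echo else 1.0
    phase = first * half + sign * second * half
    return float(np.abs(np.mean(np.exp(1j * phase))))


free = simulate_coherence(1.0, sigma_static=3.0, sigma_drift=0.3, use_echo=False)
echo = simulate_coherence(1.0, sigma_static=3.0, sigma_drift=0.3, use_echo=True)
print(f"coherence without echo: {free:.3f}, with echo: {echo:.3f}")
```

Without the echo the random phases wash out almost all coherence; with it, only the small drift between the two halves contributes. Longer pulse sequences extend the same idea to noise that changes faster.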

Cryogenic Cooling and its Impact

Superconducting qubits, in particular, must be held at extremely low temperatures, typically in the millikelvin range. At higher temperatures, thermal fluctuations disrupt the delicate superposition and entanglement of qubits, leading to decoherence and errors. Dilution refrigerators, pre-cooled by liquid helium or pulse-tube stages, are used to reach these ultra-low temperatures. The design and operation of these cryogenic systems are complex and require careful consideration of thermal management, vibration isolation, and other factors that can affect the stability of the qubits.

The size and complexity of these cryogenic systems also pose a significant challenge to scaling up quantum computers.
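A short calculation shows why millikelvin temperatures matter. The sketch below evaluates the Bose-Einstein occupation, n = 1 / (exp(hf / k_B T) − 1), which gives the average number of thermal excitations at frequency f and temperature T. The 5 GHz frequency is an assumed, typical-order value for a microwave-controlled qubit, not a figure from the text.

```python
import math

PLANCK = 6.62607015e-34     # Planck constant, J*s
BOLTZMANN = 1.380649e-23    # Boltzmann constant, J/K


def thermal_occupation(frequency_hz: float, temperature_k: float) -> float:
    """Mean number of thermal excitations in a mode at the given frequency."""
    x = PLANCK * frequency_hz / (BOLTZMANN * temperature_k)
    return 1.0 / math.expm1(x)


QUBIT_FREQUENCY_HZ = 5e9  # assumed qubit frequency (illustrative)

for label, temp in (("room temperature", 300.0),
                    ("liquid helium", 4.0),
                    ("dilution refrigerator", 0.02)):
    n = thermal_occupation(QUBIT_FREQUENCY_HZ, temp)
    print(f"{label:>22} ({temp} K): {n:.3g} thermal excitations")
```

At 4 K the mode still holds more than ten thermal excitations on average, which would swamp a single-excitation qubit state; at 20 mK the occupation drops to a few parts per million.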

Innovative Approaches to Control System Scalability

Several innovative approaches are being explored to improve the efficiency and scalability of control systems. One promising approach is the development of integrated circuits that incorporate both the control electronics and the qubits on a single chip. This reduces the wiring complexity and improves the signal fidelity. Another approach involves using superconducting resonators to distribute control signals to multiple qubits simultaneously, reducing the number of individual control lines needed.

Furthermore, advancements in digital-to-analog converters (DACs) and microwave signal generators are leading to more efficient and higher-fidelity control systems. Research into new materials and fabrication techniques also plays a crucial role in developing more robust and scalable control systems. For example, the use of superconducting materials allows for the creation of high-quality microwave components that operate efficiently at cryogenic temperatures.

Software and Programming for Scalable Quantum AI

Developing software and programming tools capable of harnessing the power of large-scale quantum computers for AI applications presents a significant hurdle. Current quantum computers are still in their nascent stages, and the software ecosystem is evolving rapidly to meet the demands of increasingly complex quantum algorithms and hardware architectures. This necessitates the development of new programming paradigms and tools that address the unique challenges of quantum computation, such as qubit coherence times, error rates, and the need for efficient management of quantum resources.

The current landscape of quantum programming languages and frameworks is a mix of established approaches and emerging innovations. Several languages aim to provide higher-level abstractions, shielding the programmer from the low-level details of quantum hardware control, while others offer more direct control over the quantum operations. The choice of programming paradigm significantly influences the scalability and efficiency of quantum AI algorithms.

Quantum Programming Paradigms and Their Suitability for Large-Scale AI

Different quantum programming paradigms offer varying levels of abstraction and control, impacting their suitability for large-scale AI tasks. Circuit-based models, dominant in current quantum computing, represent computations as sequences of quantum gates acting on qubits. This approach is relatively well-understood but can become cumbersome for complex algorithms. Measurement-based quantum computing offers a different approach, using a pre-prepared entangled state and measurements to perform computations.

This paradigm can potentially offer advantages in scalability but requires specialized hardware and software. Adiabatic quantum computing, and the closely related quantum annealing used in D-Wave systems, takes a different approach based on slowly evolving the Hamiltonian of a quantum system to find the ground state, which can be useful for specific optimization problems. Each paradigm has its strengths and weaknesses regarding scalability and applicability to AI.

For example, circuit-based models are currently better supported by existing quantum hardware and software tools, while measurement-based approaches might offer better scalability for certain types of algorithms in the future. Adiabatic quantum computing is currently limited in its ability to tackle the broad range of AI problems that are suitable for gate-based quantum computers.

Key Features of an Ideal Quantum Programming Environment for Scalable AI

An ideal quantum programming environment for scalable AI would need to address several crucial aspects. The following list highlights key features necessary for efficient development and deployment of large-scale quantum AI algorithms:

- High-level abstraction: The environment should allow programmers to express algorithms in a high-level language, abstracting away low-level hardware details. This allows for easier algorithm design and modification, and increased portability across different quantum hardware platforms.

- Automatic optimization: The environment should include tools for automatically optimizing quantum circuits for execution on specific hardware, minimizing the number of gates and reducing the impact of noise.

- Error mitigation techniques: Incorporating robust error mitigation strategies is crucial for handling the inherent noise in quantum computers. The environment should provide tools and techniques to reduce the impact of errors on computation results.

- Hardware-aware compilation: The compiler should be aware of the specific hardware architecture, allowing for efficient mapping of quantum algorithms onto the physical qubits and minimizing communication overhead.

- Scalable resource management: The environment should provide tools for managing the allocation and scheduling of quantum resources efficiently, especially important for large-scale computations.

- Hybrid classical-quantum computation support: Most quantum AI algorithms will require a combination of classical and quantum computation. The environment should seamlessly integrate classical and quantum computing capabilities.

- Debugging and profiling tools: Advanced debugging and profiling tools are essential for identifying and resolving errors in quantum algorithms and optimizing their performance.

- Support for diverse quantum algorithms: The environment should support a wide range of quantum algorithms, including those specifically designed for AI tasks such as quantum machine learning algorithms.
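As a small illustration of the automatic optimization item in the list above, the sketch below implements one classic compiler pass: cancelling back-to-back pairs of self-inverse gates. The circuit representation (a list of gate name and qubit tuple pairs) and the function name are hypothetical, chosen only for this example; production compilers apply many such passes together with hardware-aware routing.

```python
# Gates that undo themselves when applied twice in a row on the same qubits.
SELF_INVERSE = {"H", "X", "Y", "Z", "CNOT"}


def cancel_adjacent_inverses(circuit):
    """Remove consecutive identical self-inverse gates from a gate list.

    circuit is a list of (gate_name, qubits) tuples in execution order.
    Only gates that are directly adjacent in the list are cancelled, which
    is conservative but always preserves the circuit's behavior.
    """
    optimized = []
    for gate in circuit:
        name, _qubits = gate
        if optimized and optimized[-1] == gate and name in SELF_INVERSE:
            optimized.pop()   # the pair multiplies to the identity
        else:
            optimized.append(gate)
    return optimized


circuit = [
    ("H", (0,)),
    ("X", (1,)),
    ("X", (1,)),       # cancels with the previous X on qubit 1
    ("H", (0,)),       # now adjacent to the first H, so it cancels too
    ("CNOT", (0, 1)),
]
optimized = cancel_adjacent_inverses(circuit)
print(f"{len(circuit)} gates before, {len(optimized)} after:", optimized)
```

Using a stack lets cancellations cascade: once the two X gates disappear, the two H gates become neighbors and are removed as well, leaving a single CNOT. Fewer gates means less time for decoherence and fewer opportunities for gate errors.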

Future Directions and Potential Breakthroughs

The scalability challenges facing quantum AI hardware are significant, but ongoing research suggests several promising avenues for overcoming these limitations. Addressing these challenges requires a multi-pronged approach, encompassing advancements in qubit technology, architectural design, and control systems. Significant breakthroughs in any of these areas could dramatically accelerate the development of practical, large-scale quantum computers capable of tackling complex AI problems.

Progress in several key areas holds the potential to unlock the full power of quantum AI. These areas are interconnected, and advancements in one often drive progress in others. For example, improvements in qubit coherence times directly impact the complexity of algorithms that can be implemented.

Promising Research Areas Addressing Scalability

Several research directions hold considerable promise for improving the scalability of quantum AI hardware. These include exploring new qubit modalities with inherently higher coherence times and improved controllability, developing novel qubit architectures that simplify connectivity and reduce crosstalk, and creating more robust and efficient error correction codes. Furthermore, significant advances in cryogenic engineering and control systems are crucial for managing the complex environment required for stable quantum computation.

Potential Technological Advancements Leading to Breakthroughs

One promising area is the development of topological qubits. These qubits, based on non-local properties of matter, are theoretically more robust to environmental noise and decoherence, potentially leading to longer coherence times and simplified error correction. Another area is the exploration of novel materials and fabrication techniques to create higher-quality qubits with improved performance. For example, research into silicon-based qubits is showing considerable progress, offering a potential path towards scalable manufacturing using existing semiconductor technology.

Finally, advancements in quantum error correction codes, moving beyond simple repetition codes to more sophisticated approaches, are crucial for building fault-tolerant quantum computers.

Hypothetical Future Quantum Computer Architecture

Imagine a future quantum computer architecture based on a modular, scalable design using topological qubits. This architecture, which we’ll call the “Modular Topological Quantum Array” (MTQA), employs a 2D array of topological qubits arranged in a hexagonal lattice. Each hexagon represents a module containing a small number (e.g., 16) of qubits with local control and measurement capabilities. These modules are interconnected via optical fibers, enabling long-range qubit entanglement and communication between modules.

The hexagonal lattice structure provides efficient connectivity while minimizing crosstalk. Error correction is implemented using a distributed, surface code approach across multiple modules, leveraging the inherent robustness of topological qubits. The entire system is housed in a highly advanced cryogenic system, providing exceptional thermal isolation and stability.

This MTQA architecture would be scalable by simply adding more hexagonal modules to the array. The modular design simplifies fabrication, testing, and maintenance, allowing for the construction of progressively larger quantum computers. The use of optical interconnects minimizes the need for complex, error-prone wiring within the cryostat, while the topological qubits provide inherent protection against noise. This combination of modularity, topological qubits, and optical interconnects represents a potential path towards building truly large-scale, fault-tolerant quantum computers capable of solving currently intractable AI problems.

The hexagonal arrangement allows for efficient nearest-neighbor interactions and facilitates the implementation of surface codes for error correction. The optical interconnects enable long-range entanglement, essential for scalable quantum algorithms. The modular design simplifies manufacturing and maintenance, making it more practical for large-scale deployment.

Conclusion

The development of scalable quantum AI hardware is a monumental undertaking, pushing the boundaries of physics and engineering. While significant challenges remain, the progress made in understanding and mitigating these hurdles is remarkable. From advancements in qubit control and error correction to the development of sophisticated quantum programming languages, the field is constantly evolving. The potential rewards – revolutionary advances in artificial intelligence, drug discovery, materials science, and more – are immense, fueling continued investment and innovation.

The future of quantum AI is bright, promising a transformative impact on numerous aspects of our lives.

Questions and Answers

What are the main differences between superconducting and trapped ion quantum computers?

Superconducting qubits leverage the quantum properties of superconducting circuits, while trapped ion qubits use individual ions trapped in electromagnetic fields. Superconducting systems generally offer higher qubit counts but suffer from shorter coherence times, while trapped ion systems boast longer coherence times but are currently limited in scalability.

How does error correction work in quantum computing, and why is it so crucial?

Quantum error correction involves encoding quantum information across multiple qubits to protect it from noise and errors. This is vital because even small errors can quickly accumulate in large-scale quantum computations, rendering the results unreliable.

What role does cryogenics play in quantum computing?

Cryogenics is essential for maintaining the extremely low temperatures required to preserve the delicate quantum states of qubits. These low temperatures minimize thermal noise and allow for stable quantum computations.

What are some examples of quantum algorithms used in machine learning?

Quantum algorithms like Quantum Approximate Optimization Algorithm (QAOA) and Variational Quantum Eigensolver (VQE) are being explored for machine learning tasks such as optimization and classification.

What are the biggest obstacles to building a fault-tolerant quantum computer?

Building a fault-tolerant quantum computer requires overcoming challenges related to qubit coherence, error rates, scalability, and the development of efficient error correction codes. The sheer complexity of managing and controlling a large number of qubits is a major hurdle.