Low-Latency Communication: A Deep Dive

Low-latency communication is revolutionizing how we interact with the digital world. Imagine a world where online gaming is completely lag-free, financial transactions happen instantaneously, and remote surgery is as precise as if the surgeon were in the same room. This is the promise of low-latency communication, a field focused on minimizing delays in data transmission. This exploration delves into the technologies, challenges, and applications shaping this rapidly evolving landscape.

From the underlying technologies like fiber optics and 5G to the critical considerations of network congestion and jitter, we’ll examine the key factors influencing latency. We’ll also explore how different industries, from finance to healthcare, are leveraging low-latency communication to improve efficiency, accuracy, and user experience. The journey will also touch upon future trends, such as quantum communication, and their potential to further minimize latency and transform how we connect.

Defining Low-Latency Communication

Low-latency communication refers to the transmission of data with minimal delay. This is crucial in many applications where speed and responsiveness are paramount. The shorter the delay between sending and receiving information, the more efficient and effective the communication becomes. The significance lies in enabling real-time interactions and decision-making, ultimately improving overall system performance and user experience.

Low-latency communication is characterized by a short round-trip time (RTT), the time it takes for a signal to travel from a sender to a receiver and back. Minimizing this RTT is the primary goal. The acceptable latency threshold varies greatly depending on the specific application; a few milliseconds can be critical in some scenarios, while hundreds of milliseconds might be tolerable in others.

Industries Requiring Low-Latency Communication

Several industries heavily rely on low-latency communication for their operations. The financial sector, for example, uses it for high-frequency trading where milliseconds can mean the difference between profit and loss. Similarly, online gaming demands extremely low latency to provide a smooth and responsive player experience, preventing lag and ensuring fair gameplay. Autonomous vehicles rely on near-instantaneous communication between sensors, control systems, and other vehicles to navigate safely and efficiently.

Remote surgery and telemedicine also necessitate low-latency connections to allow surgeons to control robotic instruments with precision and minimal delay. Finally, industrial automation systems utilize low-latency communication for real-time control and monitoring of machinery and processes.

Key Performance Indicators (KPIs) for Low-Latency Communication

Several KPIs are used to measure the effectiveness of low-latency communication systems. These include:

- Round-Trip Time (RTT): The total time it takes for a signal to travel from sender to receiver and back.

- Jitter: The variation in delay between successive packets of data.

- Packet Loss: The percentage of data packets that fail to reach their destination.

- Bandwidth: The amount of data that can be transmitted per unit of time.

These metrics are essential for identifying bottlenecks and optimizing communication systems to achieve the desired low-latency performance. High jitter, for example, can disrupt real-time audio and video playback, while high packet loss can render the communication unreliable.
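As a rough illustration of how these KPIs might be computed from probe measurements, here is a minimal Python sketch. The function name `link_kpis` and the sample values are hypothetical, and jitter is taken here as the mean absolute difference between consecutive RTTs, which is only one of several definitions in use.

```python
from statistics import mean

def link_kpis(rtts_ms):
    """Summarize probe results.

    `rtts_ms` holds one RTT in milliseconds per probe, or None if the
    probe was lost. Names and structure are illustrative assumptions.
    """
    if not rtts_ms:
        raise ValueError("need at least one probe result")
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    # Jitter: mean absolute difference between consecutive received RTTs.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    return {
        "mean_rtt_ms": mean(received) if received else None,
        "jitter_ms": mean(diffs) if diffs else 0.0,
        "loss_pct": loss_pct,
    }

# Hypothetical probe results: five probes, one of them lost.
samples = [20.0, 22.0, None, 21.0, 25.0]
print(link_kpis(samples))
```

Bandwidth is left out of the sketch because it is usually measured separately, for example by timing a bulk transfer.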

Comparison of Communication Protocols

Different communication protocols exhibit varying latency characteristics. The choice of protocol depends heavily on the specific application requirements.

| Protocol | Typical Latency (ms) | Bandwidth | Reliability |
|---|---|---|---|
| TCP | 10-100 | Variable | High |
| UDP | 1-10 | Variable | Low |
| WebRTC | <10 | Variable | Medium |
| QUIC | <10 | Variable | High |

Note: Latency values are approximate and can vary based on network conditions and implementation.
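Part of the latency gap between TCP and UDP comes from transport behavior rather than the network itself. As a hedged sketch using Python's standard `socket` module: a TCP socket can disable Nagle's algorithm with `TCP_NODELAY` so that small writes are sent immediately, while a UDP socket sends datagrams with no connection setup and no retransmission. The helper names below are illustrative, not part of any library.

```python
import socket

def low_latency_tcp_socket():
    """Create a TCP socket with Nagle's algorithm disabled (TCP_NODELAY)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Send small writes right away instead of coalescing them.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock

def udp_socket():
    """Create a UDP socket: no handshake, no ordering, no retransmission."""
    return socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

tcp = low_latency_tcp_socket()
print("TCP_NODELAY enabled:",
      tcp.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0)
tcp.close()
```

Disabling Nagle's algorithm trades some bandwidth efficiency for lower per-message delay, so it tends to suit small, frequent messages more than bulk transfers.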

Technologies Enabling Low-Latency Communication

Achieving truly low-latency communication requires a synergistic approach, combining advanced networking technologies, optimized hardware, and efficient data handling techniques. This section explores the key technological components that contribute to minimizing delays in data transmission.

Low latency is achieved through a combination of factors, focusing on minimizing the time it takes for data to travel from source to destination. This involves optimizing every stage of the communication process, from the physical transmission medium to the processing power of the devices involved.

Fiber Optics

Fiber optic cables offer far higher data rates than traditional copper cables. Light in glass fiber travels at roughly two-thirds of its speed in vacuum, which is comparable to electrical signals in copper, so the advantage comes less from raw signal speed than from much lower signal degradation over long distances, which means fewer repeaters along the path. The inherent bandwidth of fiber optics also allows a much larger volume of data to be transmitted simultaneously, further reducing latency caused by congestion.

Immunity to electromagnetic interference also contributes to its superior performance in low-latency applications.

5G Cellular Networks

Fifth-generation (5G) cellular networks represent a significant advancement in mobile communication, offering significantly lower latency compared to previous generations. Key improvements include higher frequencies (millimeter wave), advanced antenna technologies (massive MIMO), and network slicing, which allows for the creation of dedicated, low-latency network paths. This makes 5G suitable for real-time applications like remote surgery, autonomous driving, and augmented reality experiences that demand immediate responsiveness.

The improvements in spectral efficiency and reduced interference also contribute to overall lower latency.

Specialized Hardware

Specialized hardware plays a critical role in reducing latency. This includes high-performance network interface cards (NICs) with advanced features like RDMA (Remote Direct Memory Access), which bypasses the operating system’s kernel for faster data transfer. Field-Programmable Gate Arrays (FPGAs) offer configurable hardware that can be optimized for specific low-latency tasks, allowing for custom processing pipelines that minimize delays.

Similarly, high-performance computing (HPC) clusters often utilize specialized interconnects like InfiniBand, designed for extremely low latency communication between nodes.

Network Topologies and Latency

The choice of network topology significantly influences latency. Star topologies, where all devices connect to a central hub, keep any two devices at most two hops apart, which generally gives lower latency for point-to-point communication than bus or ring topologies, where traffic may pass through many intermediate nodes. However, the central hub can become a bottleneck if not properly designed and scaled. Mesh topologies offer redundancy and increased bandwidth; a full mesh provides direct links between nodes, while a partial mesh can introduce higher latency when data has to cross several hops.

The optimal topology depends on the specific requirements of the application and the size and scale of the network.
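To make the hop-count argument concrete, the following Python sketch builds small star, ring, and full-mesh networks and counts the links on the shortest path between two nodes with a breadth-first search. The helper names and the eight-node size are arbitrary choices for illustration, and hop count is only a proxy for latency since it ignores link length and queuing.

```python
from collections import deque

def hop_count(adjacency, src, dst):
    """Breadth-first search: number of links on the shortest src-dst path."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, hops = queue.popleft()
        if node == dst:
            return hops
        for nxt in adjacency[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + 1))
    return None  # dst is unreachable

def star(n):
    """Node 0 is the hub; nodes 1..n are leaves attached to it."""
    adjacency = {0: list(range(1, n + 1))}
    for leaf in range(1, n + 1):
        adjacency[leaf] = [0]
    return adjacency

def ring(n):
    """Each node links to its two neighbors around the ring."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}

def full_mesh(n):
    """Every node links directly to every other node."""
    return {i: [j for j in range(n) if j != i] for i in range(n)}

print("star:", hop_count(star(8), 1, 5))            # leaf -> hub -> leaf: 2
print("ring:", hop_count(ring(8), 0, 4))            # opposite side of ring: 4
print("full mesh:", hop_count(full_mesh(8), 0, 4))  # direct link: 1
```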

Compression Techniques and Latency

Data compression techniques play a crucial role in reducing latency, particularly in bandwidth-constrained environments. By reducing the size of the data packets, compression decreases transmission time, directly impacting latency. Algorithms like LZ77, DEFLATE, and Zstandard are commonly used, offering different levels of compression ratio and computational overhead. The choice of compression algorithm involves a trade-off between compression ratio and the processing time required for compression and decompression, which can affect overall latency.
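The trade-off can be explored with a small experiment. The sketch below uses Python's standard `zlib` module (a DEFLATE implementation) to compress a payload at several levels and adds the measured compression time to an estimated transmission time. The payload, the 10 Mbps link speed, and the function name are assumptions for illustration; receiver-side decompression time is ignored for brevity.

```python
import time
import zlib

def send_time_ms(payload, level, link_mbps):
    """Estimate compress-plus-transmit time in ms for one payload.

    Returns (total_ms, compressed_size_bytes). Timing varies by machine.
    """
    start = time.perf_counter()
    compressed = zlib.compress(payload, level)
    compress_ms = (time.perf_counter() - start) * 1000
    # Time to push the compressed bytes onto a link of the given speed.
    transmit_ms = len(compressed) * 8 / (link_mbps * 1_000_000) * 1000
    return compress_ms + transmit_ms, len(compressed)

# Hypothetical, highly repetitive telemetry; real data compresses less well.
payload = b"sensor_reading=42;status=ok;" * 4000

for level in (0, 1, 6, 9):  # 0 = store only, 9 = best compression
    total_ms, size = send_time_ms(payload, level, link_mbps=10)
    print(f"level={level} size={size} B total={total_ms:.2f} ms")
```

On a slow link the smaller payload usually wins even at higher compression levels; on a very fast link the compression time itself can dominate, which is the trade-off described above.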

Hypothetical Low-Latency Network Architecture

A hypothetical network architecture optimized for low-latency communication might consist of a fiber optic backbone connecting regional data centers. These data centers would utilize high-performance switches and routers with low latency features. Within each data center, servers would be interconnected via InfiniBand, enabling extremely fast communication between processing nodes. 5G cellular networks would provide low-latency access for mobile clients, with network slicing ensuring prioritized bandwidth and minimal interference.

The entire network would be managed by a sophisticated network monitoring and control system, capable of dynamically adjusting routing and bandwidth allocation to minimize latency based on real-time network conditions. This system would also incorporate advanced Quality of Service (QoS) mechanisms to prioritize low-latency traffic.

Challenges in Achieving Low-Latency Communication

Achieving truly low-latency communication is a significant hurdle in many applications, from online gaming to real-time financial trading. Numerous factors conspire to introduce delays and inconsistencies, impacting the quality and reliability of the communication. Understanding these challenges is crucial for designing effective and efficient low-latency systems.

Network Congestion and Jitter

Network congestion, where the volume of data transmitted exceeds the network’s capacity, is a primary source of latency. This leads to increased queuing delays as packets wait for transmission. Jitter, the variation in packet arrival times, further degrades performance, causing uneven playback or disruptions in real-time applications. For instance, imagine a video conference: congestion leads to noticeable delays in hearing others, while jitter causes audio to sound choppy and fragmented.

These problems are particularly acute during peak usage times or on overloaded networks.

Mitigating Network Jitter and Packet Loss

Several techniques can mitigate the effects of jitter and packet loss. Quality of Service (QoS) mechanisms prioritize low-latency traffic, ensuring it receives preferential treatment over less critical data. Techniques like buffering can smooth out jitter by temporarily storing incoming packets and releasing them at a more consistent rate. Forward Error Correction (FEC) adds redundancy to transmitted data, allowing the receiver to reconstruct lost or corrupted packets.

For example, in online gaming, QoS prioritization ensures that game commands reach the server quickly, minimizing lag. Buffering helps smooth out temporary network hiccups, preventing noticeable stutters in the gameplay.
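As a minimal sketch of the FEC idea, the Python example below protects a group of equal-length packets with a single XOR parity packet, which lets the receiver rebuild any one lost packet without a retransmission. This is the simplest possible scheme, chosen for illustration; production systems typically use stronger codes such as Reed-Solomon. The packet contents and function names are hypothetical.

```python
def xor_parity(packets):
    """Build one parity packet as the byte-wise XOR of equal-length packets."""
    parity = bytearray(len(packets[0]))
    for pkt in packets:
        for i, byte in enumerate(pkt):
            parity[i] ^= byte
    return bytes(parity)

def recover_missing(received, parity):
    """Rebuild the single missing packet (marked None) from survivors + parity."""
    missing = [i for i, pkt in enumerate(received) if pkt is None]
    if len(missing) != 1:
        raise ValueError("XOR parity repairs exactly one lost packet per group")
    survivors = [pkt for pkt in received if pkt is not None]
    # XOR of all survivors and the parity cancels everything but the lost packet.
    return missing[0], xor_parity(survivors + [parity])

group = [b"move", b"jump", b"fire"]   # sender side: three 4-byte packets
parity = xor_parity(group)            # sent alongside the group

received = [b"move", None, b"fire"]   # receiver side: second packet lost
index, rebuilt = recover_missing(received, parity)
print(index, rebuilt)  # 1 b'jump'
```

The cost is one extra packet per group, which is the redundancy overhead the text refers to.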

Bandwidth and Latency Trade-offs

Bandwidth and latency are related but distinct, and network design often has to trade one against the other. Increasing bandwidth reduces serialization and queuing delay, because each packet spends less time being placed on the wire and queues drain faster, but it does not shorten propagation delay, which is set by distance and the transmission medium. Higher bandwidth also tends to require more complex and more expensive infrastructure. Consider a fiber optic network versus a traditional copper-based network: fiber offers significantly higher bandwidth and lower signal loss over distance, but its implementation is more expensive and complex.

The optimal balance depends on the specific application’s requirements. A high-bandwidth, high-latency connection might be suitable for large file transfers, while a low-bandwidth, low-latency connection is critical for real-time applications.
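A back-of-the-envelope calculation shows why the two metrics behave differently. The Python sketch below splits one-way delay into propagation delay (distance divided by signal speed) and serialization delay (payload size divided by bandwidth). It assumes light covers roughly 200 km per millisecond in fiber and ignores queuing and processing delay; the 1000 km distance and payload sizes are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # assumed: about two-thirds of the speed of light in vacuum

def delay_components_ms(distance_km, payload_bytes, bandwidth_mbps):
    """Return (propagation_ms, serialization_ms) for a single transfer."""
    propagation = distance_km / FIBER_KM_PER_MS
    serialization = payload_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000
    return propagation, serialization

cases = [
    ("100 B packet", 100),
    ("100 MB file", 100_000_000),
]
for label, size in cases:
    for mbps in (10, 1000):
        prop, ser = delay_components_ms(1000, size, mbps)
        print(f"{label} at {mbps} Mbps: "
              f"propagation {prop:.1f} ms + serialization {ser:.4f} ms")
```

For the small packet, the 5 ms of propagation dominates and extra bandwidth barely helps; for the large file, serialization dominates and bandwidth is what matters.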

Solutions for Addressing Latency Issues in Real-Time Applications

Addressing latency issues requires a multifaceted approach. Optimizing network topology can reduce the physical distance data travels, minimizing propagation delays. Employing efficient network protocols, such as UDP instead of TCP in certain situations, can reduce overhead and improve speed. Content Delivery Networks (CDNs) distribute content closer to users, reducing the distance data must travel. Furthermore, utilizing specialized hardware, like low-latency switches and routers, significantly enhances performance.

Finally, employing techniques like predictive algorithms can anticipate and compensate for latency variations, improving the user experience. For instance, in autonomous driving, predictive algorithms might anticipate delays and adjust vehicle control accordingly.
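One simple form of latency compensation is dead reckoning: the receiver extrapolates the last reported state forward by the known delay instead of acting on stale data. The Python sketch below assumes constant velocity over the latency interval, which is a strong simplification; the function name and values are illustrative only.

```python
def predict_position(last_position, velocity, latency_s):
    """Extrapolate a position forward by `latency_s` seconds.

    Assumes velocity stays constant during the delay (dead reckoning).
    """
    return tuple(p + v * latency_s for p, v in zip(last_position, velocity))

# A vehicle last reported at x=10 m, moving at 20 m/s along x,
# and the report is 50 ms old by the time it is processed.
estimate = predict_position((10.0, 0.0), (20.0, 0.0), 0.05)
print(estimate)  # about (11.0, 0.0): the vehicle has moved roughly 1 m
```

Prediction hides latency rather than removing it, so the estimate degrades as delay grows or as motion becomes less predictable.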

Applications of Low-Latency Communication

Low-latency communication, characterized by its minimal delay, is revolutionizing various sectors by enabling real-time interactions and enhancing efficiency. Its impact spans across numerous industries, significantly improving user experience and opening up new possibilities for innovation. This section explores some key application areas and the benefits derived from minimizing communication delays.

Low-latency communication offers a competitive edge in several fields. The reduced delay translates directly into improved responsiveness, increased accuracy, and ultimately, a better user experience. This is especially crucial in applications where even fractional seconds can make a significant difference.

Online Gaming

The online gaming industry heavily relies on low-latency communication. In multiplayer games, high latency leads to noticeable lag, impacting gameplay and player experience. For example, a player might see an opponent’s actions delayed, making it difficult to react effectively. Low latency, however, ensures that actions are reflected almost instantaneously, creating a smooth and responsive gaming environment. This improves competitiveness, enhances immersion, and contributes to a more enjoyable gaming experience.

Games like competitive first-person shooters (FPS) and real-time strategy (RTS) games are particularly sensitive to latency. A delay of even a few milliseconds can be the difference between victory and defeat.

Financial Trading

In high-frequency trading, milliseconds matter. Low-latency communication networks enable traders to execute trades almost instantaneously, capitalizing on fleeting market opportunities. High latency can lead to missed opportunities and significant financial losses. The speed of communication is crucial for algorithmic trading systems, where complex algorithms analyze market data and execute trades automatically. Low latency ensures these systems can react quickly to changing market conditions, maximizing profits and minimizing risks.

For example, a delay of even a few microseconds can mean the difference between buying or selling at a slightly more favorable price, leading to significant gains or losses over time.

Remote Surgery

Robotic surgery relies heavily on low-latency communication to enable surgeons to control robotic arms remotely. High latency introduces delays in the surgeon’s control signals, leading to imprecise movements and potentially dangerous outcomes. Low latency ensures the surgeon’s movements are translated to the robotic arms almost instantaneously, providing a level of precision and control comparable to traditional open surgery.

This technology allows for minimally invasive procedures, faster recovery times, and expanded access to specialized surgical expertise, even in remote locations. The implications for patient safety and surgical outcomes are profound.

Latency Requirements for Various Applications

The acceptable latency varies greatly depending on the application. Even small increases in latency can have significant consequences.

- Online Gaming (Competitive): Less than 20 milliseconds (ms) is generally considered ideal, with anything above 100ms leading to noticeable lag.

- High-Frequency Trading: Latency needs to be measured in microseconds (µs), with single-digit microseconds being the target in many cases.

- Video Conferencing (High Quality): Under 100ms is desirable for a seamless experience, while anything above 200ms can be disruptive.

- Remote Surgery: Latency should be minimized to less than 10ms to ensure precise control and prevent complications.

- Autonomous Driving: Latency requirements are extremely stringent, often in the sub-millisecond range, for safety-critical functions.
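The targets above can be turned into a simple lookup for checking a measured latency against each application. The Python sketch below encodes the upper bounds from the list; the dictionary, the function name, and the reading of "single-digit microseconds" as under 0.01 ms and "sub-millisecond" as under 1 ms are assumptions made for illustration.

```python
# Upper latency targets in milliseconds, taken from the list above.
LATENCY_TARGETS_MS = {
    "competitive gaming": 20.0,
    "high-frequency trading": 0.01,  # assumed: under 10 microseconds
    "video conferencing": 100.0,
    "remote surgery": 10.0,
    "autonomous driving": 1.0,       # assumed: sub-millisecond
}

def supported_applications(measured_ms):
    """Return the applications whose target the measured latency meets."""
    return sorted(
        name for name, limit in LATENCY_TARGETS_MS.items()
        if measured_ms < limit
    )

print(supported_applications(15.0))
# ['competitive gaming', 'video conferencing']
```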

Future Trends in Low-Latency Communication

The pursuit of ever-faster communication is driving innovation across multiple technological domains. Emerging technologies promise to drastically reduce latency, leading to a future where near-instantaneous communication becomes the norm, impacting various sectors profoundly. This section explores these advancements and their potential societal consequences.

The quest for lower latency is pushing the boundaries of what’s technologically possible. Advancements in both hardware and software are converging to create a future where the speed of communication ceases to be a limiting factor in many applications.

This will unlock new possibilities and reshape existing industries in significant ways.

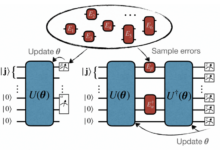

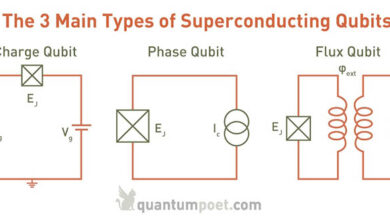

Quantum Communication’s Potential

Quantum communication leverages the principles of quantum mechanics to transmit information with unprecedented security. Quantum entanglement, for instance, correlates the measurement outcomes of two entangled particles regardless of the distance separating them. These correlations cannot carry information faster than light, so entanglement does not by itself reduce latency, and a classical channel is still needed alongside it. While still in its nascent stages, quantum communication holds the potential to revolutionize secure communication networks, especially those that combine strict latency and security requirements, such as financial transactions or military communications.

Imagine a global financial system where transactions are verified over links on which any eavesdropping attempt is detectable, thanks to the unique properties of quantum states. The development of quantum repeaters, which extend the range of quantum communication, is a crucial step towards realizing a globally interconnected quantum network.

Network Virtualization and its Impact

Network virtualization allows for the creation of flexible and dynamic network environments. By decoupling the network’s physical infrastructure from its logical representation, virtualization enables efficient resource allocation and optimized routing, leading to reduced latency. Software-Defined Networking (SDN) and Network Function Virtualization (NFV) are key components of this trend. SDN allows for centralized control and management of the network, enabling intelligent routing decisions that minimize delays.

NFV replaces traditional hardware network functions with virtualized software counterparts, increasing scalability and allowing functions to be placed closer to users, which can reduce latency when deployments are carefully tuned. The implementation of these technologies in 5G and beyond networks is already demonstrating significant improvements in latency, paving the way for real-time applications like autonomous vehicles and remote surgery.
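The kind of routing decision a centralized SDN controller could make can be sketched as a shortest-path search over measured link latencies. The Python example below runs Dijkstra's algorithm on a small hypothetical topology; the node names, latency values, and function name are invented for illustration, and a real controller would use its own APIs and live telemetry.

```python
import heapq

def lowest_latency_path(links, src, dst):
    """Dijkstra over link latencies in ms.

    `links` maps node -> {neighbor: latency_ms}. Returns (path, total_ms),
    or (None, inf) if dst cannot be reached.
    """
    best = {src: 0.0}
    prev = {}
    heap = [(0.0, src)]
    while heap:
        cost, node = heapq.heappop(heap)
        if node == dst:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale heap entry
        for nxt, latency in links[node].items():
            new_cost = cost + latency
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                prev[nxt] = node
                heapq.heappush(heap, (new_cost, nxt))
    if dst not in best:
        return None, float("inf")
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1], best[dst]

# Hypothetical four-switch network with per-link latencies in ms.
links = {
    "A": {"B": 2.0, "C": 10.0},
    "B": {"A": 2.0, "C": 3.0, "D": 9.0},
    "C": {"A": 10.0, "B": 3.0, "D": 1.0},
    "D": {"B": 9.0, "C": 1.0},
}
print(lowest_latency_path(links, "A", "D"))  # (['A', 'B', 'C', 'D'], 6.0)
```

Here the three-hop route beats both two-hop alternatives, which illustrates why latency-aware routing can differ from fewest-hops routing.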

Evolution of Low-Latency Communication Standards and Protocols

The demand for low-latency communication is driving the development and refinement of new standards and protocols. The evolution of protocols like TCP/IP to support real-time applications, coupled with the development of new protocols specifically designed for low-latency environments, is crucial. This includes advancements in data compression techniques and error correction codes that minimize overhead and ensure reliable communication with minimal delay.

Furthermore, the development of new hardware interfaces, like those incorporating faster data transfer rates, will play a crucial role in achieving even lower latency. For example, the move from earlier Ethernet generations to faster standards like 400 Gigabit Ethernet and beyond shortens the time needed to place each frame on the wire, thereby reducing latency.

A Future with Near-Instantaneous Communication

Imagine a world where communication is near-instantaneous. A surgeon in London performs a complex operation on a patient in Tokyo, guided in real-time by a holographic projection of the patient’s internal organs. Autonomous vehicles seamlessly navigate crowded city streets, communicating almost instantaneously with each other and with traffic management systems to avoid collisions and optimize traffic flow. Global financial markets operate with near-zero latency, all but eliminating latency-based arbitrage and supporting fair and efficient pricing.

This scenario represents a future where the limitations of communication speed are largely overcome, leading to increased efficiency, improved safety, and enhanced global collaboration. However, such a future also presents significant societal challenges, including the potential for increased surveillance, the spread of misinformation at an unprecedented speed, and the need for robust cybersecurity measures to protect against malicious attacks targeting these high-speed, interconnected systems.

The equitable access to these technologies and the mitigation of potential negative societal impacts will be critical considerations in shaping this future.

Conclusion

Low-latency communication isn’t just about speed; it’s about enabling entirely new possibilities. As we’ve seen, the quest for near-instantaneous communication is driving innovation across multiple technologies and industries. While challenges remain in managing network congestion and ensuring consistent performance, the potential benefits are immense. The future promises even faster, more reliable, and more pervasive low-latency communication, fundamentally altering how we live, work, and interact with the world around us.

The implications are far-reaching, impacting everything from global finance to the very fabric of our social connections.

Detailed FAQs

What is jitter in the context of low-latency communication?

Jitter refers to variations in the delay of data packets arriving at their destination. Inconsistent delays disrupt the smooth flow of data, causing issues like choppy audio or video.

How does compression affect latency?

Compression reduces the size of data packets, leading to faster transmission and lower latency. However, the compression and decompression processes themselves introduce a small amount of overhead.

What are some examples of applications beyond those mentioned in the article?

Autonomous driving, industrial automation, and virtual reality are all highly reliant on low-latency communication for real-time responsiveness.

What is the difference between latency and bandwidth?

Latency is the delay in data transmission, while bandwidth is the amount of data that can be transmitted per unit of time. High bandwidth doesn’t necessarily mean low latency, and vice versa.