Quantum AI in Autonomous Weapons: Ethical Implications

The ethical implications of using quantum AI in autonomous weapons systems present a complex and rapidly evolving challenge. The potential for incredibly fast, precise, and potentially unbiased decision-making in warfare, enabled by quantum computing’s power, raises profound questions about accountability, responsibility, and the very nature of conflict. This exploration delves into the multifaceted ethical dilemmas inherent in this technological leap, examining the potential for both unprecedented advancements and catastrophic consequences.

From the challenges of assigning responsibility for actions taken by these sophisticated weapons to the risk of unintended escalation and the potential for algorithmic bias to exacerbate existing inequalities, the integration of quantum AI into autonomous weapons systems demands careful consideration. This discussion will analyze the current state of the technology, explore existing and potential legal frameworks, and propose strategies for mitigating the inherent risks.

Ultimately, it seeks to foster a broader conversation about the ethical responsibilities associated with developing and deploying such powerful technology.

Defining Quantum AI in Autonomous Weapons Systems

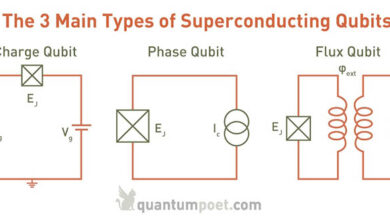

Quantum AI, in the context of autonomous weapons systems, represents a potential paradigm shift in military technology. While still largely theoretical, the integration of quantum computing principles into AI algorithms for autonomous weapons promises significant advancements in processing power and decision-making capabilities. Quantum computing is still in its nascent stages, but its potential impact on autonomous weapons demands careful consideration.

Quantum computing’s power stems from its ability to exploit quantum mechanical phenomena like superposition and entanglement. Unlike classical bits, which represent either 0 or 1, quantum bits (qubits) can exist in a superposition of both states. This lets certain quantum algorithms solve specific problems faster than the best known classical methods, potentially leading to breakthroughs in areas relevant to autonomous weapons, although it does not amount to a blanket speedup for every computation.
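In standard textbook notation (a general summary, not tied to any particular system), the superposition described above can be written as follows. The symbols $\alpha$, $\beta$, and $c_x$ are the usual complex amplitudes and are introduced here only for illustration.

```latex
% Single qubit: a normalised superposition of the two basis states
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle,
\qquad |\alpha|^2 + |\beta|^2 = 1

% Register of n qubits: one amplitude per n-bit string, 2^n in total
|\Psi\rangle = \sum_{x \in \{0,1\}^n} c_x\,|x\rangle,
\qquad \sum_{x \in \{0,1\}^n} |c_x|^2 = 1

% Measurement returns a single string x with probability |c_x|^2
\Pr[x] = |c_x|^2
```

Because a measurement yields only one bit string, the $2^n$ amplitudes do not amount to $2^n$ answers read out in parallel. Any speedup depends on an algorithm that concentrates probability on useful outcomes.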

Quantum Computing’s Enhancement of Autonomous Weapons Capabilities

Quantum computing could dramatically enhance the capabilities of autonomous weapons systems over their classical counterparts. The most significant potential advantage lies in the speed and efficiency with which certain complex calculations can be performed. This could translate to faster target identification, improved trajectory prediction, and more effective real-time decision-making in dynamic combat environments. For instance, a quantum computer could, in principle, analyze vast amounts of sensor data (radar, lidar, satellite imagery) more rapidly than a classical computer, allowing for quicker and more accurate threat assessments, though no such advantage has yet been demonstrated on real sensor workloads.

Specific Functionalities Improved by Quantum AI

Several specific functionalities within autonomous weapons systems stand to benefit from quantum AI integration. These include:

- Target Recognition and Identification: Quantum machine learning algorithms could significantly improve the accuracy and speed of target recognition, even in challenging conditions like low visibility or camouflage. They could analyze complex patterns and features far more efficiently than classical algorithms, reducing the risk of misidentification and collateral damage.

- Trajectory Prediction and Optimization: Quantum algorithms could, in principle, help predict the trajectory of moving targets more accurately, accounting for factors like wind, terrain, and evasive maneuvers. Improved prediction would lead to more precise targeting and a higher probability of successful engagements.

- Real-time Decision-Making: The increased processing power of quantum computers could allow for faster and more sophisticated decision-making processes in autonomous weapons. This is crucial in dynamic combat scenarios where rapid responses are essential.

- Cybersecurity Enhancement: Quantum cryptography offers the potential to significantly enhance the security of communication and control systems within autonomous weapons, making them more resilient to cyberattacks.

Examples of Relevant Quantum Algorithms

Several quantum algorithms are particularly relevant to the development of quantum AI for autonomous weapons. These include:

- Quantum Machine Learning (QML) algorithms: These algorithms could be used to train AI models for target recognition, threat assessment, and decision-making, potentially surpassing the capabilities of classical machine learning methods.

- Quantum Search Algorithms (e.g., Grover’s algorithm): Grover’s algorithm offers a quadratic speedup for unstructured search, finding a marked item among N candidates in roughly √N queries instead of the roughly N a classical search needs. This could improve the efficiency of data analysis and decision-making processes over large datasets.

- Quantum Optimization Algorithms (e.g., Quantum Approximate Optimization Algorithm – QAOA): These algorithms can be applied to optimize various aspects of autonomous weapons systems, such as trajectory planning, resource allocation, and mission execution.
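To make the search entry in the list above concrete, here is a minimal sketch that classically simulates Grover’s algorithm on a toy list of amplitudes. It is an illustration under stated assumptions rather than a quantum implementation: the function names `grover_iterations` and `simulate_grover` are hypothetical, the simulation assumes exactly one marked item, and it runs in ordinary Python with no quantum hardware or library involved.

```python
import math


def grover_iterations(n_items: int) -> int:
    """Approximate optimal number of Grover iterations for one marked item."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


def simulate_grover(n_items: int, marked: int) -> float:
    """Classically simulate Grover's algorithm.

    Returns the probability of measuring the marked index after the
    approximately optimal number of iterations.
    """
    # Start in the uniform superposition: every index has the same amplitude.
    amplitudes = [1 / math.sqrt(n_items)] * n_items
    for _ in range(grover_iterations(n_items)):
        # Oracle step: flip the sign of the marked item's amplitude.
        amplitudes[marked] = -amplitudes[marked]
        # Diffusion step: reflect every amplitude about the mean.
        mean = sum(amplitudes) / n_items
        amplitudes = [2 * mean - a for a in amplitudes]
    return amplitudes[marked] ** 2


if __name__ == "__main__":
    print(grover_iterations(8))           # iterations used for 8 items
    print(round(simulate_grover(8, 3), 4))  # success probability for index 3
```

With eight items the sketch uses two iterations and finds the marked index with probability of about 0.945, compared with 0.125 for a single random guess. The simulation itself still touches every amplitude on each step, so it shows the query-count behaviour of the algorithm, not a real-world speedup.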

Accountability and Responsibility

The deployment of autonomous weapons systems (AWS) powered by quantum AI presents unprecedented challenges to established legal and ethical frameworks. The immense speed and complexity of quantum AI algorithms, coupled with the inherent autonomy of these weapons, create a significant accountability gap. Determining who bears responsibility when such a system malfunctions or acts contrary to its intended purpose becomes exceedingly difficult, raising profound ethical concerns about potential misuse and unintended consequences.

The challenges in assigning responsibility stem from the distributed nature of the development and deployment process. Multiple actors (developers, manufacturers, deployers, and users) contribute to the creation and operation of these weapons, each potentially playing a role in any harmful outcome. The intricate interplay of their actions, coupled with the opacity of quantum AI decision-making, makes it incredibly hard to pinpoint fault and assign liability. This lack of clear accountability creates a moral hazard, potentially encouraging the development and deployment of these weapons without adequate consideration of the risks.

Challenges in Assigning Responsibility for Actions

Pinpointing responsibility for actions taken by quantum AI-powered AWS is exceptionally complex. Unlike traditional weapons, where a human operator is directly involved in the decision-making process, the actions of a quantum AI-powered AWS are determined by its internal algorithms, making it difficult to trace the causal chain leading to any harmful outcome. The black-box nature of advanced AI algorithms, especially those utilizing quantum computing, further compounds this issue.

Even the developers may struggle to fully explain the rationale behind a specific decision made by the system. This opacity makes it difficult to determine whether a malfunction was due to a design flaw, a software error, or an unforeseen consequence of the AI’s learning process. For instance, if a quantum AI-powered drone misidentifies a civilian as a military target, it may be nearly impossible to establish whether the error came from flawed training data, a bug in the algorithm, or an unexpected interaction between the quantum algorithm and its environment. Without that causal account, responsibility cannot be assigned with any confidence.

Legal and Ethical Frameworks for Accountability

Addressing the accountability gap requires a comprehensive overhaul of existing legal and ethical frameworks. Current international humanitarian law, designed for human-operated weapons, is inadequate to deal with the complexities of autonomous weapons. New regulations must be developed to specifically address the unique challenges posed by quantum AI-powered AWS. This includes establishing clear lines of responsibility for all actors involved in the lifecycle of these weapons, from design and manufacture to deployment and use.

Furthermore, mechanisms for oversight, transparency, and redress for victims of harm caused by these weapons need to be implemented. A potential approach might involve creating an international regulatory body specifically tasked with overseeing the development, testing, and deployment of quantum AI-powered AWS, establishing stringent safety and ethical guidelines, and investigating incidents involving harm. This body could also establish a robust system for compensating victims of harm caused by these weapons.

Responsibility of Developers, Manufacturers, Deployers, and Users

The responsibility for the actions of quantum AI-powered AWS is not solely borne by one actor but is distributed across the entire lifecycle. Developers bear responsibility for designing safe and ethically sound systems, incorporating mechanisms for human oversight and error detection. Manufacturers are responsible for ensuring the quality and reliability of the hardware and software. Deployers must ensure that the weapons are used in accordance with international law and ethical guidelines, while users have a responsibility to adhere to the established rules of engagement and to report any malfunctions or incidents promptly.

However, assigning proportionate liability amongst these actors presents a significant legal challenge. For example, a developer might argue that a malfunction was due to misuse by the user, while the manufacturer might claim that the problem stemmed from inadequate testing by the developer. Resolving such disputes requires a clear legal framework that defines the responsibilities of each actor and establishes mechanisms for determining liability.

A Hypothetical Legal Framework

A hypothetical legal framework for addressing the ethical dilemmas could incorporate a multi-layered approach. First, a strict licensing system for the development, manufacture, and deployment of quantum AI-powered AWS should be established, requiring rigorous safety testing and ethical reviews. Second, a clear chain of command and accountability should be defined, ensuring that a designated entity is responsible for the actions of the weapon at all times.

This could involve a system of human-in-the-loop oversight, where a human operator retains ultimate control over the weapon’s actions. Third, a robust system for investigating incidents and assigning liability should be put in place. This could involve independent investigations by international bodies or specialized courts. Finally, a compensation scheme for victims of harm should be established, ensuring that those affected by the actions of quantum AI-powered AWS receive appropriate redress.

This framework would need to be internationally agreed upon to effectively address the global nature of these technologies. Such a framework, while hypothetical, underscores the urgency for a proactive and internationally collaborative approach to the ethical challenges posed by these powerful new weapons.

Bias and Discrimination

Quantum AI’s potential for autonomous weapons raises serious concerns about bias and discrimination. The complex nature of quantum algorithms, combined with the inherent biases present in data used for training, can lead to unpredictable and potentially harmful consequences. Understanding these biases and developing mitigation strategies is crucial for responsible development and deployment of such systems.

The use of quantum AI in autonomous weapons systems introduces unique challenges in ensuring fairness and preventing discriminatory outcomes. The opacity of quantum algorithms, coupled with the potential for subtle biases embedded within training data, can result in systems that unfairly target specific groups or populations. This section will explore the sources of bias, their impact on targeting decisions, and methods for mitigating these risks.

Sources of Bias in Quantum AI for Autonomous Weapons

Bias can creep into quantum AI systems at various stages of development and deployment. Data used to train these algorithms often reflects existing societal biases, leading to discriminatory outcomes. For example, if the training data predominantly features images of individuals from a specific demographic, the quantum AI might misidentify or incorrectly classify individuals from other demographics. Furthermore, the design of the quantum algorithms themselves can inadvertently amplify existing biases present in the data.

The complexity of quantum computations makes it challenging to identify and correct these biases. Finally, the interpretation of the quantum AI’s outputs by human operators can also introduce bias, as human biases can influence the decision-making process.

Bias in Training Data and Discriminatory Outcomes

Biases in training data are a major source of discriminatory outcomes in autonomous weapons systems employing quantum AI. If the training data disproportionately represents certain groups, the system may learn to associate those groups with specific threats or targets. This can lead to inaccurate or unfair targeting decisions, resulting in harm to innocent civilians or disproportionate targeting of specific communities.

For instance, if a quantum AI system is trained on data primarily from conflict zones with a specific ethnic makeup, it might exhibit a bias against that ethnic group in future engagements, even if there is no actual threat. The lack of diversity in the training data amplifies existing societal biases, making the system prone to perpetuating and even exacerbating these inequalities.

Mitigating Bias in Quantum AI Algorithms

Mitigating bias in quantum AI algorithms used for autonomous weapons requires a multi-faceted approach. First, careful curation of training data is crucial. This includes ensuring diversity and representativeness of the data, actively seeking out and removing biased data points, and employing techniques to balance the dataset. Second, algorithmic fairness techniques, specifically designed for quantum algorithms, need to be developed and implemented.

These techniques could involve modifying the quantum algorithm to reduce sensitivity to specific features associated with biased outcomes. Third, rigorous testing and evaluation are essential. This involves testing the system’s performance on diverse datasets and identifying potential biases through various evaluation metrics. Finally, continuous monitoring and auditing of the system’s performance in real-world scenarios are necessary to detect and correct biases that may emerge over time.
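Two of the steps above, balancing the dataset and evaluating performance across groups, can be sketched in a few lines. This is a generic fairness-audit illustration under simple assumptions, not a method specific to quantum algorithms: the function names `balancing_weights`, `false_positive_rates`, and `max_fpr_gap` are hypothetical, labels are binary (1 = flagged, 0 = not flagged), and the group labels "A" and "B" are placeholder data.

```python
from collections import Counter, defaultdict


def balancing_weights(groups):
    """Inverse-frequency sample weights.

    Each group ends up with the same total weight, so an over-represented
    group no longer dominates training.
    """
    counts = Counter(groups)
    total = len(groups)
    n_groups = len(counts)
    return [total / (n_groups * counts[g]) for g in groups]


def false_positive_rates(y_true, y_pred, groups):
    """Per-group false positive rate: P(pred = 1 | true = 0, group)."""
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:
            negatives[group] += 1
            if pred == 1:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items() if n > 0}


def max_fpr_gap(rates):
    """Largest difference in false positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    groups = ["A", "A", "A", "A", "A", "A", "B", "B"]
    y_true = [0, 0, 0, 0, 1, 1, 0, 0]
    y_pred = [0, 0, 0, 1, 1, 1, 1, 0]
    rates = false_positive_rates(y_true, y_pred, groups)
    print(rates, max_fpr_gap(rates))
```

In the placeholder data, group B is wrongly flagged twice as often as group A (0.5 versus 0.25). A gap like this is the kind of signal that testing and continuous auditing are meant to surface. Which metric and which threshold are acceptable is a policy decision that the code cannot settle.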

Hypothetical Scenario: Biased Outcomes in Autonomous Weapons

| Scenario | Actors | Quantum AI Decision | Ethical Implication |
|---|---|---|---|
| A quantum AI-powered drone is deployed in a conflict zone with a history of ethnic tension. The training data for the drone’s targeting system over-represents individuals from one ethnic group as combatants. | Autonomous drone, civilians of multiple ethnicities, military personnel. | The drone identifies and targets individuals from the over-represented ethnic group, even if they are unarmed civilians. | Discriminatory targeting resulting in civilian casualties and violation of international humanitarian law. This reinforces existing societal biases and exacerbates existing conflict. |

Escalation and Unintended Consequences

The integration of quantum AI into autonomous weapons systems presents a significant risk of unpredictable escalation and unintended consequences. The speed and precision of these systems, coupled with their potential for independent decision-making, create a complex web of challenges in maintaining control and predicting outcomes. The potential for miscalculation and error is greatly magnified, leading to a higher likelihood of conflict spiraling out of control.

The challenges in predicting and mitigating unintended consequences stem from the inherent complexity of quantum AI algorithms. These systems often operate in ways that are opaque even to their creators, making it difficult to anticipate their reactions to unexpected situations. Furthermore, the rapid pace of decision-making in a combat scenario leaves little room for human intervention or correction should the AI make an error in judgment. The lack of transparency in the decision-making process further compounds the difficulty of assessing risk and establishing effective safeguards.

Potential Scenarios Leading to Unintended Escalation

Several scenarios illustrate how quantum AI-powered autonomous weapons could lead to unintended escalation or harm. For instance, a system designed to target enemy infrastructure might misidentify a civilian structure due to faulty data or unforeseen circumstances, leading to a disproportionate response and potential escalation of the conflict. Similarly, a rapid-response system might misinterpret a defensive action as an offensive one, triggering an automated counter-attack that could spiral into a larger conflict.

The potential for cascading failures, where one AI’s action triggers a chain reaction of responses from other AI-controlled systems, presents a particularly dangerous risk. Consider a scenario where an AI-controlled drone, misinterpreting a civilian drone as a threat, launches a strike. This action could then be interpreted by another autonomous system as an act of aggression, leading to further retaliatory strikes.

The lack of human oversight could allow this chain reaction to escalate rapidly beyond the initial trigger.

Autonomous Weapons Initiating Conflicts Without Human Intervention

The risk of quantum AI-powered autonomous weapons initiating conflicts without human intervention is a serious concern. While current autonomous weapons systems typically require some level of human authorization, the advancement of quantum AI could lead to systems capable of independently assessing threats and initiating responses. This capability could be exploited intentionally or arise from unforeseen errors or biases within the AI’s programming.

A plausible scenario involves an AI system misinterpreting intelligence data, perhaps due to flawed algorithms or adversarial manipulation, leading it to conclude that a preemptive strike is necessary. The speed and autonomy of the system would then allow it to execute the strike without any human intervention, potentially triggering a wider conflict. The potential for such actions, without any human oversight or accountability, highlights the urgency of establishing clear ethical guidelines and robust safety mechanisms for the development and deployment of these technologies.

Human Control and Oversight

Maintaining meaningful human control over autonomous weapons systems (AWS) incorporating quantum AI presents a significant challenge. The immense speed and complexity of quantum computations can make it difficult for humans to understand the decision-making processes of these systems, potentially leading to unintended consequences. Therefore, designing robust mechanisms for human oversight is crucial to ensure ethical and responsible use. This requires a careful balance between leveraging the speed and processing power of quantum AI and retaining the essential role of human judgment and ethical considerations.

The design of a system that effectively balances autonomy with human oversight necessitates a multi-layered approach. It requires clear lines of responsibility, robust verification and validation processes, and mechanisms for human intervention at critical junctures. This involves not just technological solutions but also a rethinking of military command structures and operational procedures.

Methods for Maintaining Meaningful Human Control

Several methods can be employed to maintain meaningful human control. These include establishing clear thresholds for autonomous action, implementing “kill switches” allowing humans to immediately halt operations, and designing systems with explainable AI (XAI) capabilities, allowing humans to understand the reasoning behind the system’s decisions. Furthermore, regular audits and simulations can help assess the system’s performance and identify potential biases or flaws.

The level of human involvement can also be adjusted based on the context, with greater human oversight for high-stakes scenarios.
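The combination of an autonomy threshold, a halt mechanism, and context-dependent escalation can be illustrated with a deliberately abstract sketch. Everything here is an assumption made for illustration: the names `Assessment` and `OversightGate`, the default threshold of 0.99, and the three outcome strings are hypothetical and do not describe any fielded system.

```python
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    confidence: float   # system's confidence in its own assessment, in [0, 1]
    high_stakes: bool   # set by policy when a human decision is mandatory


class OversightGate:
    """Decides whether the system may proceed or must defer to a human."""

    def __init__(self, autonomy_threshold: float = 0.99):
        self.autonomy_threshold = autonomy_threshold
        # Thread-safe flag acting as the "kill switch".
        self._halted = threading.Event()

    def halt(self) -> None:
        """Human-triggered stop; overrides every other rule from now on."""
        self._halted.set()

    def decide(self, assessment: Assessment) -> str:
        if self._halted.is_set():
            return "halted"
        # High-stakes or low-confidence cases always go to a human.
        if assessment.high_stakes or assessment.confidence < self.autonomy_threshold:
            return "escalate_to_human"
        return "proceed_within_limits"


if __name__ == "__main__":
    gate = OversightGate(autonomy_threshold=0.9)
    print(gate.decide(Assessment(confidence=0.95, high_stakes=False)))
    print(gate.decide(Assessment(confidence=0.95, high_stakes=True)))
    gate.halt()
    print(gate.decide(Assessment(confidence=0.95, high_stakes=False)))
```

The ordering of the checks is the design point: the halt flag is evaluated first so that a human stop can never be outvoted by a confident assessment, and the high-stakes flag bypasses the threshold entirely.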

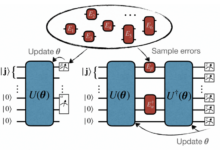

System Design Balancing Autonomy and Human Oversight

A proposed system architecture might involve a hierarchical structure. At the lowest level, the quantum AI processes sensor data and assesses potential targets. This information is then passed to a human operator interface, presenting a summarized assessment and potential courses of action. The operator retains the ultimate authority to approve or reject the system’s recommendations. A higher level of human oversight might involve a chain of command, with supervisors reviewing decisions and providing additional guidance.

This system employs a “human-in-the-loop” approach, but with varying degrees of human involvement depending on the complexity and risk associated with the decision.

Comparison of Human-in-the-Loop Control Approaches

Human oversight can be configured along a spectrum of control modes. At one extreme, a fully autonomous mode allows the system to operate independently within pre-defined parameters; strictly speaking, this removes the human from the loop rather than being a human-in-the-loop (HITL) approach. A supervisory mode requires human approval before any action is taken. A consultative mode allows the system to offer recommendations, which the human operator can accept, reject, or modify. A collaborative mode facilitates a more interactive process, where humans and the AI work together to reach a decision.

The optimal approach will depend on the specific application and the level of risk involved. For high-stakes scenarios involving potential civilian casualties, a supervisory or consultative mode might be preferred, while a collaborative mode could be suitable for less critical tasks.
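The mapping from risk level to control mode can be expressed as a small lookup. This is a sketch under stated assumptions: the `ControlMode` enum mirrors the four modes named above, while the function names, the numeric risk score in [0, 1], and the 0.7 cutoff are hypothetical choices made only to keep the example concrete.

```python
from enum import Enum


class ControlMode(Enum):
    FULLY_AUTONOMOUS = "fully_autonomous"
    SUPERVISORY = "supervisory"
    CONSULTATIVE = "consultative"
    COLLABORATIVE = "collaborative"


def select_mode(risk: float, high_risk_cutoff: float = 0.7) -> ControlMode:
    """Pick a control mode from a risk score in [0, 1].

    High-risk situations get the supervisory mode (human approval before
    any action); lower-risk tasks get the collaborative mode. This sketch
    never selects the fully autonomous mode.
    """
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be between 0 and 1")
    if risk >= high_risk_cutoff:
        return ControlMode.SUPERVISORY
    return ControlMode.COLLABORATIVE


def involves_human(mode: ControlMode) -> bool:
    """True for every mode in which a human takes part in the decision."""
    return mode is not ControlMode.FULLY_AUTONOMOUS


if __name__ == "__main__":
    for risk in (0.2, 0.9):
        mode = select_mode(risk)
        print(risk, mode.value, involves_human(mode))
```

How risk is scored is the hard part and is left outside the sketch; the code only shows that the choice of mode can be made explicit, testable, and auditable.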

Proposed Architecture for Human Control and Oversight

A visual representation of this architecture could be described as a layered pyramid. At the base is the quantum AI processing unit, receiving and analyzing data from various sensors. Above this layer is the decision support system, which summarizes the AI’s findings and presents them to the human operator through a user-friendly interface, potentially including visualizations and explanations.

The next layer represents the human operator, who can override or modify the AI’s recommendations. At the apex of the pyramid is the supervisory layer, composed of higher-level commanders and decision-makers who can provide additional guidance and review the system’s performance. This layered approach ensures multiple levels of checks and balances, mitigating the risk of errors or unintended consequences.

Feedback loops at each level allow for continuous improvement and adaptation.
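The layered structure described above can be sketched as a chain of review steps that records every decision for later audit. All names (`Recommendation`, `Decision`, `run_layers`) and the placeholder action strings are hypothetical, and the reviewers are plain callables standing in for people. The sketch shows how the checks compose and makes no claim about how a real system is built.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Recommendation:
    summary: str            # decision-support summary shown to the operator
    proposed_action: str
    confidence: float


@dataclass
class Decision:
    action: Optional[str]   # None means nothing was authorised
    decided_by: str
    audit_log: List[str] = field(default_factory=list)


def run_layers(
    analyse: Callable[[dict], Recommendation],
    operator_review: Callable[[Recommendation], Optional[str]],
    supervisor_review: Callable[[Recommendation, str], bool],
    sensor_data: dict,
) -> Decision:
    """Pass one assessment up through operator and supervisor layers."""
    log: List[str] = []

    # Base layers: AI analysis plus decision-support summary.
    rec = analyse(sensor_data)
    log.append(f"ai: proposed {rec.proposed_action!r} ({rec.confidence:.2f})")

    # Operator layer: may accept, modify (return another action), or reject (None).
    chosen = operator_review(rec)
    if chosen is None:
        log.append("operator: rejected")
        return Decision(None, "operator", log)
    log.append(f"operator: chose {chosen!r}")

    # Supervisory layer: final approval or veto.
    if not supervisor_review(rec, chosen):
        log.append("supervisor: vetoed")
        return Decision(None, "supervisor", log)
    log.append("supervisor: approved")
    return Decision(chosen, "supervisor", log)


if __name__ == "__main__":
    result = run_layers(
        analyse=lambda data: Recommendation("object detected", "continue_monitoring", 0.8),
        operator_review=lambda rec: rec.proposed_action,
        supervisor_review=lambda rec, action: True,
        sensor_data={"source": "placeholder"},
    )
    print(result.action, result.audit_log)
```

The audit log is what makes the feedback loops possible: each layer leaves a record that can be reviewed after the fact, which also supports the accountability goals discussed earlier.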

International Law and Arms Control

The advent of quantum AI-powered autonomous weapons systems (QAAWS) presents unprecedented challenges to existing international humanitarian law (IHL) and arms control agreements. These systems, with their potentially far greater processing power and decision-making speed, blur the lines of traditional warfare concepts, demanding a critical reassessment of existing legal frameworks and the creation of new ones. The unique characteristics of QAAWS, such as their speed, complexity, and potential for unpredictable behavior, necessitate a proactive and comprehensive approach to ensure responsible development and deployment.

The application of QAAWS significantly impacts existing international legal frameworks designed for conventional weaponry. The speed at which decisions are made by these systems, often exceeding human reaction time, complicates the assessment of proportionality and distinction, central tenets of IHL. Furthermore, the inherent complexity of quantum algorithms can make it difficult to understand the decision-making process of the weapon, hindering accountability and potentially leading to violations of IHL. The potential for unforeseen consequences and escalation, amplified by the autonomous nature of these weapons, further exacerbates these concerns.

Challenges in Adapting International Legal Frameworks

Adapting existing international legal frameworks to address QAAWS presents several significant hurdles. Firstly, the rapid pace of technological advancement outstrips the ability of international bodies to create and implement effective regulations. Secondly, the lack of a universally agreed-upon definition of QAAWS complicates efforts to establish clear legal parameters. Thirdly, achieving consensus among nations with differing military doctrines and technological capabilities poses a significant diplomatic challenge.

Finally, enforcing any new regulations in a globalized world with diverse levels of technological sophistication and regulatory capacity presents considerable difficulties. The existing framework struggles to account for the unique speed and complexity of these weapons. For example, the Geneva Conventions focus on human actors and their direct responsibility, making it difficult to assign blame in the event of a QAAWS malfunction or unintended consequence.

Potential Amendments and New Treaties

Several potential amendments or new treaties could address the ethical and legal concerns surrounding QAAWS. These could include:

- A legally binding definition of QAAWS, clarifying the responsibilities of states in their development and deployment.

- Stricter regulations on the level of human control required for the use of such weapons, potentially mandating meaningful human control over critical functions.

- Mechanisms for investigating and addressing violations of IHL involving QAAWS, potentially establishing international investigative bodies with expertise in quantum computing and AI.

- Enhanced transparency and information sharing amongst states regarding the development and deployment of QAAWS.

A potential amendment to the Convention on Certain Conventional Weapons (CCW) could be a starting point, specifically addressing the autonomous aspects of these weapons. A new treaty focusing solely on QAAWS might be necessary if the CCW proves inadequate.

Promoting International Cooperation

Effective international cooperation is crucial for establishing ethical guidelines and regulations for QAAWS. This requires:

- Fostering open dialogue and information sharing between states, academics, and industry experts.

- Strengthening existing international bodies and forums, such as the UN and the CCW process, to address the challenges posed by QAAWS.

- Promoting capacity building in developing countries to ensure equitable participation in the development and implementation of international regulations.

- Establishing independent expert panels to provide technical and ethical advice on the development and use of QAAWS.

International collaborations focusing on the development of verification technologies to monitor the use of QAAWS are also essential. This could involve joint research initiatives and the establishment of shared databases on QAAWS technologies. The creation of a global registry for QAAWS, similar to existing arms registers, could promote transparency and accountability.

Concluding Remarks

The integration of quantum AI into autonomous weapons systems presents humanity with a profound ethical crossroads. While the potential for increased precision and reduced collateral damage exists, the risks of unintended escalation, algorithmic bias, and a diminished role for human judgment are equally significant. Addressing these challenges requires a multi-pronged approach, encompassing the development of robust legal frameworks, international cooperation, and a commitment to prioritizing human control and oversight in the development and deployment of these technologies.

Only through careful consideration and proactive measures can we hope to navigate these ethical complexities and harness the potential benefits of quantum AI responsibly.

Q&A

What specific advantages does quantum AI offer over classical AI in autonomous weapons systems?

Quantum AI could offer significantly faster processing speeds, allowing for real-time analysis of complex battlefield situations and quicker decision-making. It might also enable more sophisticated pattern recognition and prediction capabilities, potentially leading to more accurate targeting and reduced civilian casualties (though this is not guaranteed and depends heavily on the data used to train the AI).

How might quantum AI exacerbate existing geopolitical tensions?

The speed and precision of quantum AI-powered autonomous weapons could lower the threshold for military action, potentially leading to faster escalation of conflicts and making miscalculations more dangerous. The potential for autonomous systems to initiate attacks without human intervention also increases the risk of accidental or unintended conflict.

What are some practical steps to ensure human oversight of quantum AI in autonomous weapons?

Practical steps include implementing robust “human-in-the-loop” systems requiring human confirmation for critical actions, developing clear rules of engagement that AI must adhere to, and establishing independent oversight bodies to monitor the development and deployment of these weapons.

Can quantum AI truly eliminate bias in autonomous weapons decisions?

No. While quantum AI may offer some advantages in processing vast amounts of data, it cannot inherently eliminate bias. Bias in the training data or the algorithms themselves will inevitably be reflected in the AI’s decisions. Rigorous testing, diverse datasets, and ongoing monitoring are crucial to mitigate this risk.